A team of researchers at the Fraunhofer Institute for Digital Media Technology has developed a prototype called the “Hearing Car,” designed to improve road safety for autonomous vehicles. By equipping cars with external microphones and artificial intelligence, the project aims to let vehicles detect, localize, and classify environmental sounds, so they can respond to hazards that are still out of sight.

The initiative seeks to address critical safety concerns, particularly the need for vehicles to recognize approaching emergency vehicles and other potential dangers, such as pedestrians or mechanical failures. Moritz Brandes, a project manager for the Hearing Car, emphasizes the importance of integrating this auditory capability: “It’s about giving the car another sense, so it can understand the acoustic world around it.”

Testing the Hearing Car

In March 2025, researchers undertook an extensive test drive, covering 1,500 kilometers from Oldenburg to a proving ground in northern Sweden. This journey allowed the team to evaluate the system’s performance under various challenging conditions, including dirt, snow, and freezing temperatures.

The team set out to answer several questions about the system’s functionality and durability. One was how dirt or frost on the microphone housings would affect sound localization and classification. Initial tests indicated that performance degradation was minimal once the modules were cleaned and dried, and the tests also confirmed that the microphones could withstand a car wash.

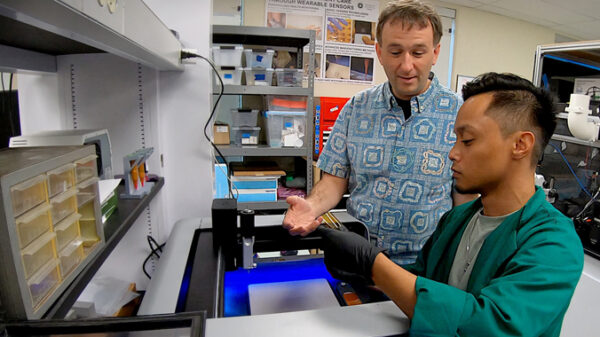

Each external microphone module (EMM) houses three microphones in a 15-centimeter package and is mounted at the rear of the vehicle to minimize wind noise. The captured audio is digitized and converted into spectrograms, which a region-based convolutional neural network (RCNN) trained for audio event detection then analyzes. When the system identifies a siren, for example, it cross-checks the vehicle’s camera data for a flashing light, reducing the likelihood of false positives.
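The spectrogram step can be illustrated with a short-time Fourier transform. The sketch below is not Fraunhofer’s code: the frame length, hop size, sample rate, and the `hann` and `spectrogram` functions are assumptions chosen for illustration, and it uses a naive DFT to stay dependency-free where a production system would use an FFT library. Many audio classifiers also apply log or mel scaling before the network; that step is omitted here.

```python
import cmath
import math


def hann(n: int) -> list[float]:
    """Periodic Hann window of length n (tapers frame edges to reduce spectral leakage)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def spectrogram(samples: list[float], frame_len: int = 256, hop: int = 128) -> list[list[float]]:
    """Linear-magnitude spectrogram: one row per frame, frame_len // 2 + 1 frequency bins per row."""
    window = hann(frame_len)
    rows = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = [s * w for s, w in zip(samples[start:start + frame_len], window)]
        row = []
        # Naive DFT over the non-negative frequency bins (an FFT would be used in practice).
        for k in range(frame_len // 2 + 1):
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / frame_len) for t, x in enumerate(frame))
            row.append(abs(coeff))
        rows.append(row)
    return rows


# Example: a 1 kHz tone sampled at 16 kHz (bin spacing 16000 / 256 = 62.5 Hz).
fs = 16000
tone = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(1600)]
spec = spectrogram(tone)
peak_bins = [max(range(len(row)), key=row.__getitem__) for row in spec]
```

For the 1 kHz test tone, every frame’s energy peaks in bin 16 (16 × 62.5 Hz = 1 kHz). A siren would instead show up as a peak that sweeps up and down across bins over time, which is the kind of pattern an image-style detector can learn to pick out.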

The system localizes sounds using beamforming, although the team has not disclosed the specifics of its method. All processing runs onboard, which keeps latency low and avoids dependence on internet connectivity or exposure to radio-frequency interference. According to Brandes, a modern Raspberry Pi has enough computing power to run the system.
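Since Fraunhofer has not said which beamforming method it uses, the following is only a generic illustration of the idea: a narrowband, far-field delay-and-sum beamformer that steers across candidate angles and picks the one with the most output power. The three-microphone linear array with 5 cm spacing is a hypothetical geometry (the real EMM layout is not public), and `dft_bin`, `delay_and_sum_doa`, and the single known frequency are assumptions made to keep the example small.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, roughly air at 20 °C


def dft_bin(signal: list[float], freq: float, fs: float) -> complex:
    """Complex DFT coefficient of `signal` at a single frequency in Hz."""
    w = 2 * math.pi * freq / fs
    return sum(x * cmath.exp(-1j * w * n) for n, x in enumerate(signal))


def delay_and_sum_doa(signals: list[list[float]], mic_positions: list[float],
                      freq: float, fs: float, step_deg: float = 1.0) -> float:
    """Return the steering angle (degrees, 0 = broadside) with maximum steered power.

    Far-field model for a linear array: the mic at position p (metres along the
    array axis) hears the wave p * sin(theta) / c seconds after the mic at p = 0.
    """
    coeffs = [dft_bin(s, freq, fs) for s in signals]
    omega = 2 * math.pi * freq
    best_angle, best_power = -90.0, -1.0
    for i in range(int(round(180 / step_deg)) + 1):
        theta = math.radians(-90 + i * step_deg)
        # Undo each mic's expected phase lag for this angle, then sum coherently.
        total = sum(c * cmath.exp(1j * omega * p * math.sin(theta) / SPEED_OF_SOUND)
                    for c, p in zip(coeffs, mic_positions))
        power = abs(total) ** 2
        if power > best_power:
            best_angle, best_power = math.degrees(theta), power
    return best_angle


# Synthetic 1 kHz tone arriving from 30 degrees at a hypothetical 3-mic array.
fs, freq = 16000, 1000.0
positions = [0.0, 0.05, 0.10]
true_deg = 30.0
lags = [p * math.sin(math.radians(true_deg)) / SPEED_OF_SOUND for p in positions]
signals = [[math.sin(2 * math.pi * freq * (n / fs - tau)) for n in range(1600)] for tau in lags]
estimate = delay_and_sum_doa(signals, positions, freq, fs)
```

In this noiseless case the scan lands on the true direction. With only 10 cm of aperture the beam at 1 kHz is very broad, so in real traffic noise the power peak would be far flatter; that is one reason practical localizers typically combine information across many frequency bins.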

Early benchmarks indicate that the Hearing Car can detect sirens from up to 400 meters away in quiet, low-speed conditions, although this range decreases to under 100 meters at highway speeds due to increased ambient noise. The system can trigger alerts within approximately two seconds, allowing adequate time for drivers or autonomous systems to respond.
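To put these figures in perspective, the time left to react can be estimated as detection range divided by closing speed, minus the roughly two-second alert latency. The helper `warning_time_s` and the closing speeds below are hypothetical, and the model ignores braking, acceleration, and human reaction time.

```python
def warning_time_s(detection_range_m: float, closing_speed_kmh: float,
                   alert_latency_s: float = 2.0) -> float:
    """Seconds left to respond after the alert fires.

    Assumes the emergency vehicle closes the gap at a constant speed and
    ignores braking, acceleration, and human reaction time.
    """
    if closing_speed_kmh <= 0:
        raise ValueError("closing speed must be positive")
    closing_speed_ms = closing_speed_kmh / 3.6  # km/h -> m/s
    return detection_range_m / closing_speed_ms - alert_latency_s


# Hypothetical scenarios using the ranges reported above.
low_speed = warning_time_s(400, 54)  # quiet, low-speed conditions; closing at 54 km/h (15 m/s)
highway = warning_time_s(100, 30)    # highway; closing at 30 km/h
```

An emergency vehicle doing 150 km/h behind a car at 120 km/h closes at 30 km/h, so a 100-meter detection leaves about 12 seconds, or about 10 seconds after the alert latency. Because the reported highway range is under 100 meters, that figure is an upper bound. In the quiet, low-speed case the same arithmetic gives roughly 24.7 seconds.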

Evolution of Auditory Technology in Vehicles

The development of the Hearing Car is rooted in more than a decade of research. Brandes notes, “We’ve been working on making cars hear since 2014.” Initial experiments involved simple tasks, such as detecting a tire puncture from the rhythmic tapping of a nail lodged in the tire as it struck the pavement. Support from a Tier-1 supplier accelerated the move to automotive-grade development, with a major automaker joining the effort.
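The article gives no details of how the early puncture detector worked. One simple way to exploit the rhythm, shown here purely as an illustration, is to check whether a loudness envelope repeats at the wheel-rotation period, since a nail in the tread strikes the road once per revolution. The function names, the 65 cm tire diameter, the 1 kHz envelope rate, and the 10% tolerance are all hypothetical.

```python
import math


def expected_tap_period_s(speed_kmh: float, tire_diameter_m: float) -> float:
    """A nail in the tread hits the road once per wheel revolution: period = circumference / speed."""
    return math.pi * tire_diameter_m / (speed_kmh / 3.6)


def dominant_period_s(envelope: list[float], fs: float, min_lag: int, max_lag: int) -> float:
    """Lag, in seconds, with the largest autocorrelation of the mean-removed envelope in [min_lag, max_lag]."""
    mean = sum(envelope) / len(envelope)
    x = [v - mean for v in envelope]
    best_lag = max(
        range(min_lag, max_lag + 1),
        key=lambda lag: sum(x[i] * x[i + lag] for i in range(len(x) - lag)),
    )
    return best_lag / fs


def looks_like_nail(envelope: list[float], fs: float, speed_kmh: float,
                    tire_diameter_m: float, tolerance: float = 0.1) -> bool:
    """True if the envelope repeats at roughly the wheel-rotation period."""
    expected = expected_tap_period_s(speed_kmh, tire_diameter_m)
    # Search only lags between half and one-and-a-half revolutions to avoid locking onto multiples.
    lo = max(1, int(0.5 * expected * fs))
    hi = min(len(envelope) - 1, int(1.5 * expected * fs))
    if lo > hi:
        return False
    measured = dominant_period_s(envelope, fs, lo, hi)
    return abs(measured - expected) / expected <= tolerance


# Synthetic loudness envelope (1 kHz rate) with one tap per revolution at 50 km/h.
fs = 1000.0
period = expected_tap_period_s(50, 0.65)  # hypothetical 65 cm tire: about 0.147 s
step = round(period * fs)
taps = [1.0 if n % step == 0 else 0.0 for n in range(2000)]
```

The synthetic tap train passes the check, while a steady hum with no rhythm does not. Tying the expected period to vehicle speed is what distinguishes a nail from other periodic road noise in this sketch.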

The growing adoption of electric vehicles (EVs) has underscored the necessity of enhancing auditory capabilities in cars. Eoin King, a mechanical engineering professor at the University of Galway, highlights the fundamental difference between human and machine perception: “A human hears a siren and reacts—even before seeing where the sound is coming from. An autonomous vehicle has to do the same if it’s going to coexist with us safely.”

King sees this as part of a broader shift in automotive acoustics, away from traditional physics-based methods and toward AI. “Machine listening is really the game-changer,” he says.

Looking ahead, King is cautiously optimistic about the adoption of auditory technology in vehicles. He expects initial deployments to appear in premium vehicles or autonomous fleets, with mass adoption still several years away. “In five years, I see it being niche,” he says, comparing its likely path to that of lane-departure warnings, which took years to reach mainstream vehicles.

Both Brandes and King agree that combining multiple sensory inputs is crucial for the safety of autonomous vehicles. King points out that relying solely on visual data limits a vehicle’s awareness to its line of sight. “Adding acoustics adds another degree of safety,” he concludes.

As the Fraunhofer team continues to refine its algorithms with broader datasets, it sees many possible applications for the Hearing Car. The researchers are also exploring sound detection in indoor environments, work that could be adapted for automotive use. While some advanced scenarios may take years to realize, the underlying principle remains clear: given the right data, hearing can become a valuable addition to how vehicles perceive their surroundings.