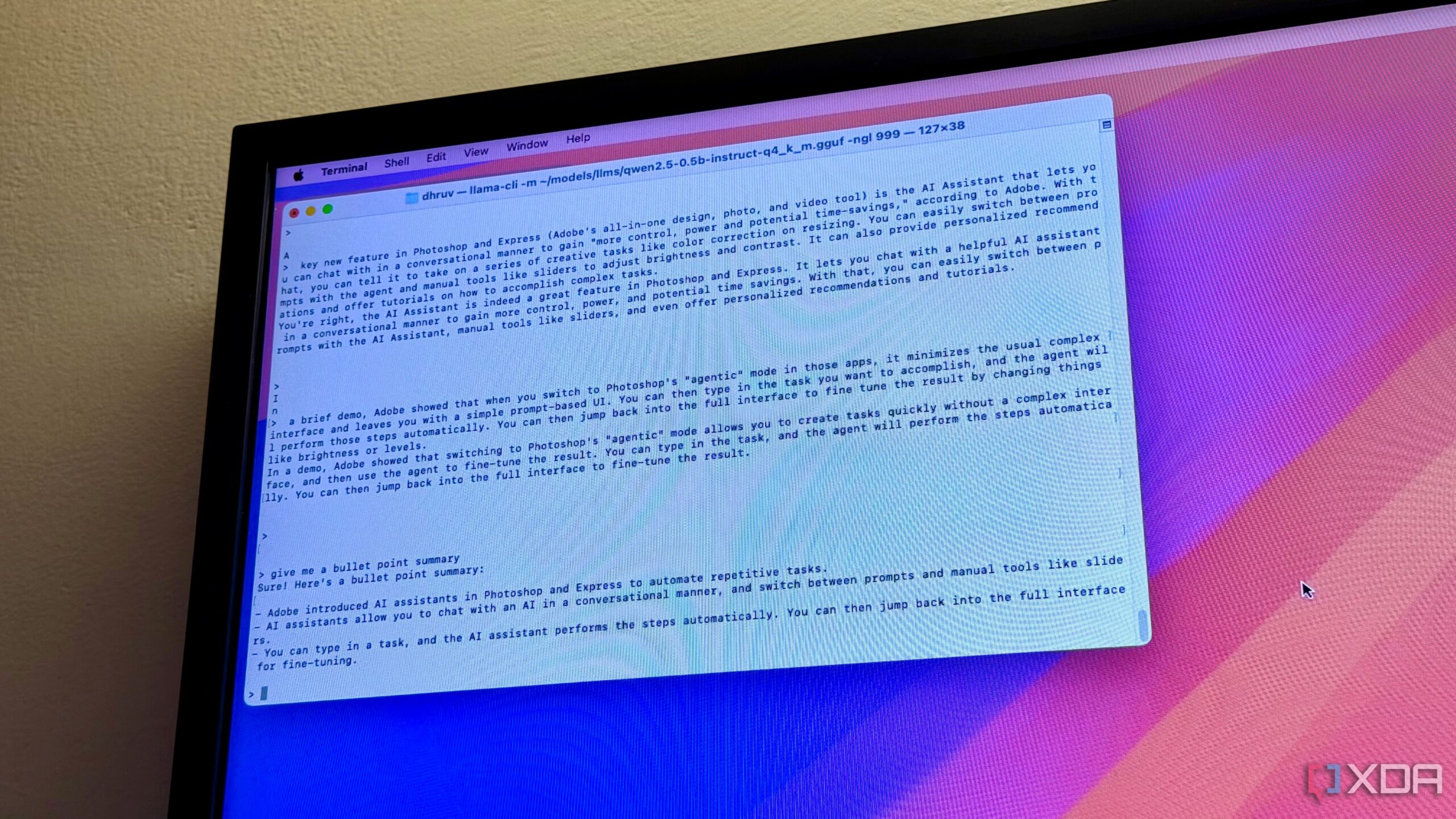

URGENT UPDATE: A growing number of users are moving away from the popular local AI tools LM Studio and Ollama and running llama.cpp, the open-source inference engine, directly from the terminal. The shift reflects growing demand for more control, efficiency, and speed when running AI models locally.

As of October 2023, enthusiasts and developers have begun reporting noticeable gains in performance and flexibility with llama.cpp. LM Studio and Ollama are friendly options for beginners, but many users find that their convenience layers come with trade-offs, such as less direct access to runtime settings. The wish for more direct control over their models is what is driving the switch.

The appeal of llama.cpp is that it strips away extra layers. Both LM Studio and Ollama have relied on llama.cpp under the hood, so running it directly removes a wrapper rather than swapping in a different engine. Users report quicker startup times and lower resource use, which makes local AI practical on lower-powered hardware. With small, quantized models, llama.cpp can even run on a Raspberry Pi or an older laptop, although generation on such devices is far slower than on a desktop GPU.
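A rough estimate illustrates why smaller machines are viable. The Python sketch below computes the memory taken by a model's weights alone from its parameter count and the bits stored per weight. The helper name `weight_memory_gb` is hypothetical. The bit widths for Q8_0 and Q4_0 follow those llama.cpp formats' block layouts, where 32 weights share one 16-bit scale. Treat the results as lower bounds, because the KV cache, file metadata, and runtime buffers add memory on top.

```python
# Bits stored per weight. Q8_0 and Q4_0 pack 32 weights per block plus one
# 16-bit scale: (32*8 + 16) / 32 = 8.5 and (32*4 + 16) / 32 = 4.5.
BITS_PER_WEIGHT = {
    "F16": 16.0,
    "Q8_0": 8.5,
    "Q4_0": 4.5,
}


def weight_memory_gb(n_params: float, fmt: str) -> float:
    """Approximate size of the weights alone, in gigabytes (1e9 bytes)."""
    return n_params * BITS_PER_WEIGHT[fmt] / 8 / 1e9


if __name__ == "__main__":
    # Compare formats for a hypothetical 7-billion-parameter model.
    for fmt in BITS_PER_WEIGHT:
        print(f"7B model, {fmt}: ~{weight_memory_gb(7e9, fmt):.1f} GB")
```

For a 7-billion-parameter model this prints about 14.0 GB at F16, 7.4 GB at Q8_0, and 3.9 GB at Q4_0. That difference is what lets a 4-bit model fit on hardware with 8 GB of RAM.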

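For many, the command-line interface brings a steeper learning curve at first. The payoff is fine-grained control over memory use and performance. Command-line flags set the context size, the number of layers offloaded to the GPU, and the thread count. llama.cpp's quantization tool can also convert a model to a lower-precision format on the user's own machine, trading a little accuracy for a much smaller memory footprint. Many users see this as a game-changer in the local AI landscape.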
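The control described above can be sketched as two command lines. The Python helpers below have the hypothetical names `quantize_cmd` and `run_cmd`. They only assemble argument lists for llama.cpp's `llama-quantize` and `llama-cli` tools and do not run anything. The binary names match recent llama.cpp builds, since older releases used different names, and the file paths are placeholders. Check `--help` on your own build before relying on any flag.

```python
from __future__ import annotations

import shlex


def quantize_cmd(src: str, dst: str, qtype: str = "Q4_K_M") -> list[str]:
    """Arguments to quantize an existing GGUF file into a smaller format."""
    # llama-quantize takes the input GGUF, the output GGUF, and the target type.
    return ["llama-quantize", src, dst, qtype]


def run_cmd(model: str, ctx_size: int = 4096, gpu_layers: int = 0,
            threads: int | None = None, lock_memory: bool = False) -> list[str]:
    """Arguments to run a model with explicit memory and performance settings."""
    cmd = [
        "llama-cli", "-m", model,
        "--ctx-size", str(ctx_size),        # context window; sizes the KV cache
        "--n-gpu-layers", str(gpu_layers),  # layers offloaded to the GPU (0 = CPU only)
    ]
    if threads is not None:
        cmd += ["--threads", str(threads)]  # CPU threads used for generation
    if lock_memory:
        cmd.append("--mlock")  # ask the OS to keep the model in RAM, not swap
    return cmd


if __name__ == "__main__":
    # Placeholder file names; adjust to your own models.
    print(shlex.join(quantize_cmd("model-f16.gguf", "model-Q4_K_M.gguf")))
    print(shlex.join(run_cmd("model-Q4_K_M.gguf", ctx_size=2048,
                             gpu_layers=20, threads=4)))
```

Passing either list to `subprocess.run` would execute it. Offloading more layers with `--n-gpu-layers` moves work to the GPU when VRAM allows. A smaller `--ctx-size` shrinks the memory reserved for the KV cache.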

A user shared,

“Switching to llama.cpp has transformed my AI experience. The control and speed are unmatched, and I feel empowered to customize my setup exactly how I want it.”

This sentiment reflects a broader trend among developers who want more flexibility in how they run AI models.

In addition, llama.cpp is open source under the MIT license, so developers can embed it directly in their own applications and workflows without depending on proprietary software. Its bundled `llama-server` exposes an OpenAI-compatible HTTP API, which makes it straightforward to script tasks or build custom tools on top of a local model. This freedom is particularly appealing to anyone who wants to automate work across their systems.
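As a sketch of this kind of integration, the Python below sends a prompt to `llama-server` over its OpenAI-compatible `/v1/chat/completions` endpoint, using only the standard library. It assumes a server is already running at the default address `http://127.0.0.1:8080`, for example one started with `llama-server -m model.gguf` (the path is a placeholder). The helper names and the `"local"` model string are hypothetical.

```python
import json
import urllib.request

DEFAULT_URL = "http://127.0.0.1:8080"  # llama-server's default host and port


def build_chat_request(prompt: str, base_url: str = DEFAULT_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": "local",  # placeholder model name (assumption)
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_reply(body: bytes) -> str:
    """Extract the assistant text from an OpenAI-style response body."""
    return json.loads(body)["choices"][0]["message"]["content"]


def ask(prompt: str, base_url: str = DEFAULT_URL, timeout: float = 120.0) -> str:
    """Send the prompt to a running llama-server and return its reply."""
    with urllib.request.urlopen(build_chat_request(prompt, base_url), timeout=timeout) as resp:
        return parse_reply(resp.read())
```

With a server running, `ask("Summarize this log file.")` returns the model's reply as a string. Request building and response parsing are kept separate from the network call, so both can be tested without a live server.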

The transition to llama.cpp is more than a technical upgrade; it changes how users interact with AI. As more of the community runs into the limits of higher-level wrapper tools, this trend is likely to accelerate. Its combination of speed, control, and open-source licensing makes llama.cpp a strong choice for anyone serious about local AI development.

In summary, the move from LM Studio and Ollama to llama.cpp reflects a broader shift in the tech community toward efficient, customizable tools. As the trend grows, users are encouraged to explore llama.cpp and judge its benefits firsthand. Keep an eye on this developing story as more users make the switch and share their experiences.