BREAKING: New insights reveal how to maximize the performance of self-hosted large language models (LLMs) even with limited VRAM. As LLMs become increasingly integrated into everyday technology, enthusiasts can now run powerful AI models from home without needing high-end GPUs.

JUST ANNOUNCED: Developers and tech aficionados can breathe new life into their older PCs with strategies that allow for effective LLM hosting. Recent tests show that running models like the 14B Qwen3 on hardware with just 8GB of VRAM is not only feasible but can deliver impressive results.

What does this mean for you? If you’ve ever wanted to harness the power of AI for personal projects, productivity, or simply to experiment, now is the time to act. With the right adjustments, even mid-range graphics cards like the RTX 3060 can effectively host complex models. This opens up accessibility to a broader audience eager to explore AI technology.

The critical factor here is VRAM, which determines how much of a model can sit on the GPU, where inference runs fastest. Most desktop PCs fall short in this area, but techniques such as model compression, quantization, and pruning can significantly reduce memory requirements. By lowering the precision of model weights, quantization shrinks the memory they occupy: moving from 16-bit to 4-bit weights cuts weight storage by roughly 75%, which makes it possible to run large models on systems with as little as 8GB of VRAM.
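To put rough numbers on the effect of quantization, here is a minimal sketch of the arithmetic. The function name `weight_memory_gb` is made up for illustration, and the estimate covers weights only: it ignores the KV cache, activations, and runtime overhead, and real 4-bit formats store extra scaling data, so actual files come out somewhat larger.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GB (10^9 bytes).

    Assumption: every parameter is stored at the same bit width and
    nothing else (KV cache, activations, scales) is counted.
    """
    return n_params * bits_per_weight / 8 / 1e9


# A 14B-parameter model at three common precisions.
for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: {weight_memory_gb(14e9, bits):.1f} GB")
```

For a 14B model this gives about 28 GB at 16-bit and about 7 GB at 4-bit, which is why a quantized model can come within reach of a mid-range card while the full-precision one cannot.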

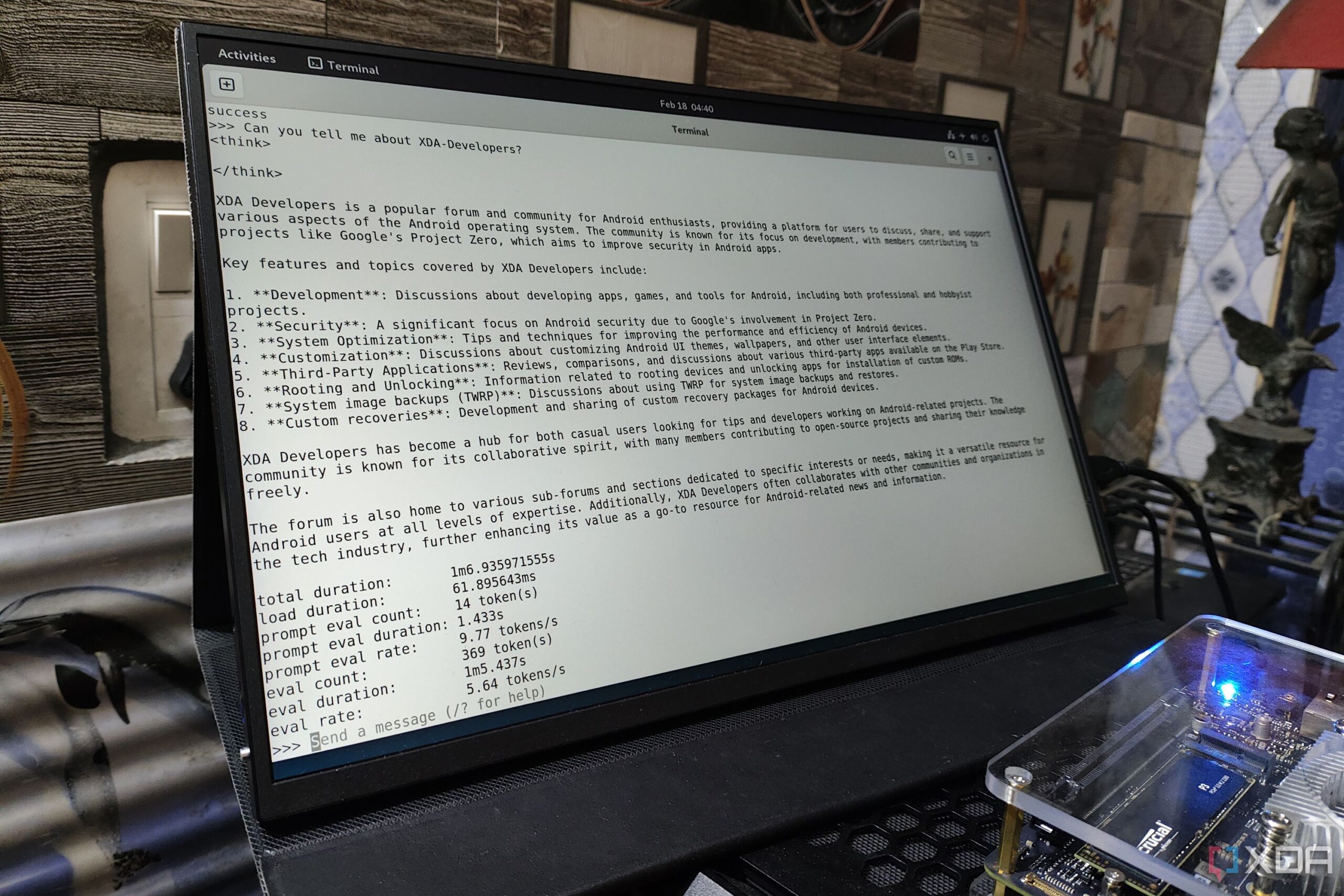

As a practical example, with just an RTX 3060, I successfully ran the 14B Qwen3 model using Q4 quantization. Unquantized at 16-bit precision, the weights of a 14B-parameter model alone would need roughly 28GB, but with Q4 quantization and careful management the model consumed only 10GB, including some spillover into system RAM. This demonstrates that with the right setup, you can achieve significant results without spending heavily on expensive hardware.
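The spillover into RAM can be reasoned about with a back-of-the-envelope split by layer. Runtimes built on llama.cpp, Ollama among them, can keep some transformer layers on the GPU and run the rest on the CPU. The sketch below is only an estimate under loud assumptions: the helper `layers_on_gpu` is hypothetical, layers are treated as equal in size, the 40-layer count and the 1.5 GB reserve for cache and buffers are illustrative guesses rather than measured values, and the 10 GB total is the figure from the example above.

```python
def layers_on_gpu(model_gb: float, n_layers: int,
                  vram_gb: float, reserve_gb: float) -> int:
    """Estimate how many equally sized layers fit in VRAM.

    reserve_gb is VRAM held back for the KV cache and runtime buffers
    (an assumed value, not something the runtime reports).
    """
    per_layer_gb = model_gb / n_layers
    usable_gb = vram_gb - reserve_gb
    if usable_gb <= 0:
        return 0  # nothing fits; the whole model runs from system RAM
    return min(n_layers, int(usable_gb / per_layer_gb))


# Assumed: 10 GB total footprint spread over 40 layers.
for vram in (8.0, 12.0):
    fit = layers_on_gpu(10.0, 40, vram, reserve_gb=1.5)
    print(f"{vram:.0f} GB VRAM: {fit} of 40 layers on the GPU")
```

The fewer layers that fit on the GPU, the more work falls to the CPU and system RAM, so generation gets slower but the model still runs.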

Moreover, lightweight frameworks such as Ollama make efficient use of the hardware you have, running LLMs on both CPUs and GPUs. Because you can download and switch between models quickly, you can find the best fit for your needs without a lengthy setup process.
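As a rough illustration of how little glue code this takes, here is a minimal sketch that sends a prompt to a locally running Ollama server over its HTTP API using only the Python standard library. It assumes the server is listening on Ollama's default port 11434 and that the model has already been pulled. The model tag `qwen3:14b` in the usage note, along with the helper names `build_request` and `generate`, are assumptions for illustration.

```python
import json
import urllib.request

# Default address of a local Ollama server (assumed unchanged).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for the given model."""
    body = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt and return the generated text.

    Raises urllib.error.URLError if no server is reachable.
    """
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, a call such as `generate("qwen3:14b", "Explain quantization in one sentence.")` returns the model's reply as a string. Switching models is then just a matter of changing the first argument to another tag you have downloaded.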

For those still on the fence, now is the time to dive in. With the growing demand for AI capabilities, being able to run LLMs from home can enhance productivity and open new avenues for creative projects. Don’t let limited hardware hold you back: take advantage of model efficiencies and discover the potential of AI in your own workspace.

In summary, the landscape for self-hosted AI is rapidly evolving. With effective techniques to mitigate VRAM limitations, anyone can harness the power of LLMs from their home setup, making this an exciting time for tech enthusiasts worldwide. Keep exploring, experimenting, and sharing your findings as this technology continues to develop.