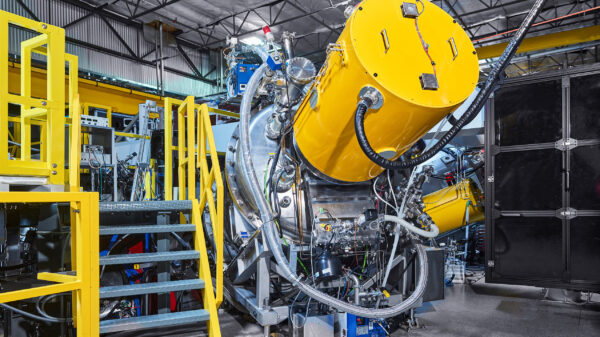

UPDATE: Nvidia Corp. has made a bold claim, declaring that its latest graphics processing units (GPUs) are a full “generation ahead” of Google’s custom silicon in the rapidly evolving AI landscape. The statement, reported by CNBC, signals a more confrontational phase in the race between the leading AI infrastructure providers.

As Big Tech companies like Google and Amazon invest billions to reduce their dependence on Nvidia’s chips, the relationship has become increasingly fraught. These companies have bought billions of dollars’ worth of Nvidia’s H100 and Blackwell GPUs while simultaneously developing their own accelerators. Nvidia’s latest assertion, however, marks a clear departure from that uneasy coexistence and points to a fierce battle for supremacy in AI hardware.

Nvidia’s confidence stems from what it presents as superior performance, particularly in memory bandwidth and networking fabric, areas where Google’s Tensor Processing Unit (TPU) has struggled to match it at scale. According to technical analyses shared on social media, Nvidia’s Blackwell architecture significantly outperforms Google’s sixth-generation Trillium TPU, even though Google claims Trillium delivers roughly four times the training performance of its predecessor.

Why This Matters NOW: The implications of Nvidia’s claim are profound. As AI models grow to trillions of parameters, they must be split across many chips, so the speed at which those chips exchange data becomes critical. Nvidia argues that while Google’s TPUs excel at specific workloads, they lack the versatility required for cutting-edge generative AI training. If that performance gap holds, it could reshape market strategies and investor sentiment, particularly as Nvidia seeks to justify premium pricing for its hardware.

The urgency is compounded by diverging philosophies on capital expenditure. Google is investing heavily in TPUs to control costs and reportedly saves significantly by designing its own chips. Nvidia, by contrast, emphasizes “time-to-intelligence”: it argues that its GPUs can train models significantly faster than Google’s offerings, so the opportunity cost of slower training could outweigh any upfront savings.

Furthermore, industry insiders point out that both companies depend on the same constrained manufacturing capacity at TSMC. In Nvidia’s telling, this means that even if Google can design competitive chips, it cannot out-innovate Nvidia’s dedicated R&D effort. The company warns that by the time Google produces a TPU to rival the H100, Nvidia will have already moved its technology further ahead.

Beyond hardware, Nvidia’s software ecosystem plays a crucial role in this contest. Its CUDA platform remains the industry standard, even as Google promotes its own alternatives, such as the JAX framework and the XLA compiler. Many enterprises prefer Nvidia GPUs because their existing code and tooling already target CUDA, which raises the cost of switching to TPUs.

Nvidia’s claims have not gone unnoticed on Wall Street. Analysts suggest that this assertive stance is aimed at protecting Nvidia’s historically high gross margins. If Google’s TPUs are perceived as adequate replacements, Nvidia’s pricing power may diminish. Conversely, if Nvidia’s performance claims are validated, it could further entrench its market dominance.

Despite the rivalry, experts predict a heterogeneous future for data centers: Nvidia’s premium GPUs will likely handle the most demanding AI workloads, while Google’s TPUs may serve more routine processing needs. The public back-and-forth between these tech titans also points to a maturing AI industry, one increasingly focused on sustainable infrastructure rather than hype.

As Nvidia positions itself as the benchmark for AI hardware, it remains to be seen how Google’s ambitions will unfold. For now, Nvidia’s declaration serves as a critical reminder of the intense competition in the AI space, with implications for developers, enterprises, and investors alike.

Stay tuned for further developments as the AI arms race continues to escalate.