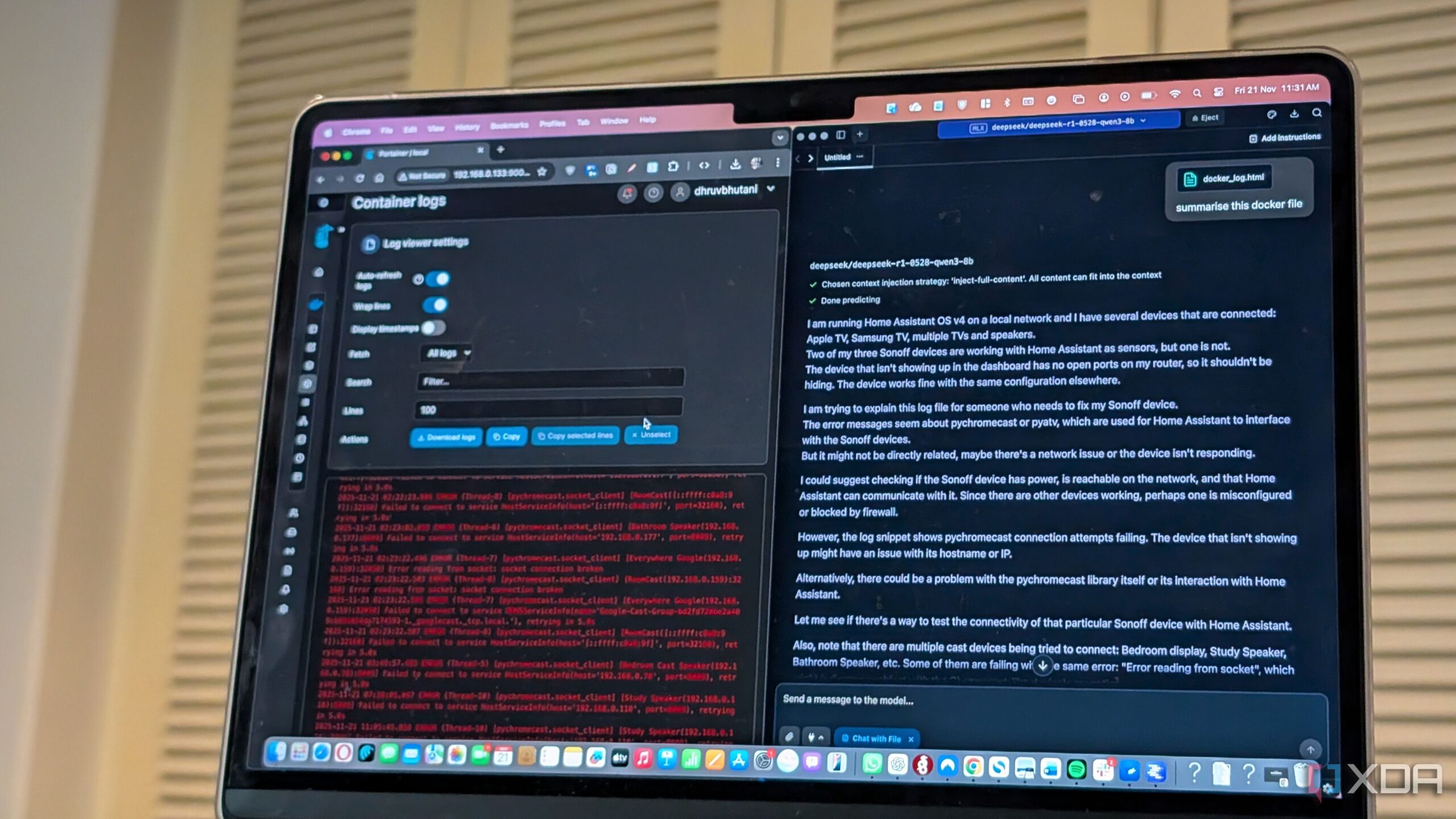

Running a local large language model (LLM) to produce nightly log summaries is changing how some users manage their home labs, making monitoring simpler and diagnostics faster. The approach tames the chaos of self-hosted environments by surfacing the important events without requiring anyone to scrutinize every log line.

For home lab users running Docker, disruptions are routine: containers crash and services fail at unpredictable times. These setups produce so many logs that users are easily overwhelmed, and problems often go unnoticed until they escalate.

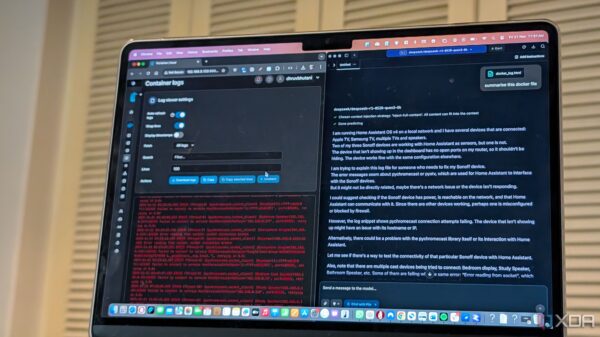

A local LLM offers a solution: nightly summaries that condense the day's logs into a short, readable digest. By reading through logs that would take a person far longer to review, the model helps users spot patterns and potential issues before they turn into serious failures, helping keep essential services running.
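
The nightly workflow can be sketched in Python. This is a minimal sketch under stated assumptions, not the setup the article describes: the article does not name a model runtime, so the code assumes an Ollama server on its default port (`http://localhost:11434`, `/api/generate` endpoint), and the model name `llama3.1:8b`, the 200-line cap per container, and the prompt wording are hypothetical choices. It collects the last 24 hours of output from each running container with `docker logs --since 24h`, keeps only the tail of each log so the prompt fits a small model's context window, and asks the model for a summary ordered by severity.

```python
import json
import subprocess
import urllib.request

# Assumption: an Ollama server on its default port; the article does not name a runtime.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "llama3.1:8b"  # hypothetical model choice
MAX_LINES_PER_CONTAINER = 200  # hypothetical cap to fit a small model's context window


def running_containers() -> list[str]:
    """Return the names of running containers via the Docker CLI."""
    out = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}}"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [name for name in out.splitlines() if name]


def recent_logs(container: str, since: str = "24h") -> list[str]:
    """Fetch the container's log lines from the last `since` window."""
    result = subprocess.run(
        ["docker", "logs", "--since", since, container],
        capture_output=True, text=True,
    )
    # `docker logs` replays the container's stderr on the CLI's stderr, so keep both
    # streams (their relative ordering is not preserved).
    return (result.stdout + result.stderr).splitlines()


def build_prompt(logs_by_container: dict[str, list[str]],
                 max_lines: int = MAX_LINES_PER_CONTAINER) -> str:
    """Assemble one prompt, keeping only the tail of each container's log."""
    sections = []
    for name, lines in sorted(logs_by_container.items()):
        tail = lines[-max_lines:]
        header = f"### {name} ({len(lines)} lines, last {len(tail)} shown)"
        sections.append(header + "\n" + "\n".join(tail))
    return (
        "Summarize the following home lab container logs from the last 24 hours. "
        "List crashes, restarts, errors, and likely misconfigurations, most severe first. "
        "If a container looks healthy, say so in one line.\n\n" + "\n\n".join(sections)
    )


def summarize(prompt: str) -> str:
    """Send the prompt to the local model and return its text response."""
    payload = json.dumps({"model": MODEL, "prompt": prompt, "stream": False}).encode()
    req = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.loads(resp.read())["response"]


def main() -> None:
    """Collect every running container's recent logs and print the model's digest."""
    logs = {name: recent_logs(name) for name in running_containers()}
    print(summarize(build_prompt(logs)))
```

Saved as a module (the name `nightly_summary.py` is hypothetical), `main()` can be invoked from a nightly cron job. Keeping only the tail of each log is a simple trade-off: the most recent events always reach the model, but an error from early in the day can be cut off.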

For instance, one user whose Pinchflat container was misbehaving used the LLM's analysis to trace the problem to a misconfigured environment variable, which made the diagnosis remarkably straightforward. Without the summary, such an issue might have gone unnoticed until it became critical, risking data loss or service downtime.

The approach extends beyond Docker logs. Users running backup jobs on a NAS benefit as well: backup logs are often ignored because they are long and verbose, yet they record warning signs such as skipped files and connectivity problems. With nightly summaries, users learn about these issues in time to intervene before a backup silently fails.
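
One way to make the backup case concrete is to pre-filter the log before the model sees it. The sketch below is an assumption, not something the article describes, and its regular expressions are hypothetical: the exact wording of skipped-file and connection errors depends on the backup tool, so they would need tuning against real log lines. Counting matches in plain code keeps the numbers in the nightly report exact, while the model is left to explain what the flagged lines mean.

```python
import re
from collections import Counter

# Hypothetical patterns: adjust to match the wording of the actual backup tool's logs.
PATTERNS = {
    "skipped": re.compile(r"\bskipp(?:ed|ing)\b|could not (?:read|open)", re.IGNORECASE),
    "connectivity": re.compile(
        r"connection (?:refused|reset)|timed out|timeout|unreachable|no route to host",
        re.IGNORECASE,
    ),
    "failure": re.compile(r"\b(?:error|failed|fatal)\b", re.IGNORECASE),
}


def triage(lines: list[str]) -> tuple[Counter, list[str]]:
    """Count which warning categories appear and keep only the lines that matched."""
    counts: Counter = Counter()
    flagged: list[str] = []
    for line in lines:
        hits = [name for name, pattern in PATTERNS.items() if pattern.search(line)]
        if hits:
            counts.update(hits)  # a single line can count toward several categories
            flagged.append(line)
    return counts, flagged


def digest(lines: list[str], max_flagged: int = 50) -> str:
    """Build a compact header with exact counts, followed by the flagged lines."""
    counts, flagged = triage(lines)
    summary = ", ".join(f"{name}={counts[name]}" for name in PATTERNS)
    body = "\n".join(flagged[:max_flagged])
    return f"Backup log: {len(lines)} lines, {len(flagged)} flagged ({summary})\n{body}"
```

The string returned by `digest` could be sent to the model in place of the full log, which keeps a long backup log within a small model's context window.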

This matters more as home automation and self-hosting grow in popularity and more people depend on services running at home. The LLM turns long, hard-to-read log streams into concise, human-readable reports, so users stay informed without spending hours on log analysis.

Users of nightly summaries report more confidence in their home lab setups, knowing they can address problems before they snowball. Beyond saving time, the summaries help users understand how their systems actually behave, connecting raw log data to practical action.

Looking ahead, this use case highlights the growing role of AI in everyday tech management. As more home lab enthusiasts adopt local LLMs, expectations for automated, intelligent tooling are likely to rise, with possibilities ranging from faster diagnostics to predictive maintenance.

As AI becomes part of personal computing and home labs, users who want these benefits can consider running a local LLM against their own logs.

Stay tuned for more developments as this trend gains momentum in the tech community, promising a future where home lab management is streamlined and more effective than ever.