Recent advancements in large language models (LLMs) have given rise to a new paradigm known as Context Engineering, which changes how users and applications interact with AI systems. Traditionally, prompt engineering was regarded as the primary method for working with LLMs. However, as these models have improved their reasoning and understanding capabilities, expectations have shifted. Not long ago, users were satisfied if ChatGPT could draft a simple email. Now they expect more complex functionality, such as data analysis, system automation, and pipeline design.

While prompt engineering focuses on crafting effective input for LLMs, it has become clear that this approach alone is insufficient for developing scalable AI solutions. Experts are now advocating for the integration of context-rich prompts, a practice referred to as Context Engineering. This method aims to provide LLMs with the comprehensive context necessary to generate precise and dependable outputs.

Understanding Context Engineering

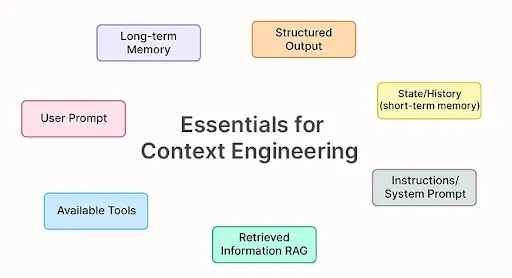

Context engineering is defined as the process of structuring the entire input given to a large language model to improve its accuracy and reliability. This involves optimizing prompts to ensure that an LLM receives all relevant context required to produce a response that aligns with the user’s needs.

At first glance, context engineering may appear to be synonymous with prompt engineering; however, the distinction is significant. Prompt engineering concentrates on formulating a single, well-structured input to guide the output from an LLM. In contrast, context engineering encompasses the broader environment surrounding the model, enhancing its output accuracy for intricate tasks. Essentially, context engineering can be understood as:

Context Engineering = Prompt Engineering + (Documents/Agents/Metadata/Retrieval-Augmented Generation)

The Components of Context Engineering

Context engineering comprises multiple components that extend beyond mere prompts. Key elements include:

1. **Instruction Prompt**: The system prompt sets the role, tone, and boundaries for the model’s responses. For instance, given the instruction “You are an expert legal assistant. Answer concisely and do not provide medical advice,” ChatGPT will tailor its answers accordingly.

2. **User Prompt**: This represents the immediate task or question posed by the user, serving as the primary signal for generating a response.

3. **Conversation History**: By maintaining a record of previous interactions, the model can provide consistent answers. For example, if a user mentions their project is in Python, subsequent questions about database connections will likely receive responses in the same programming language.

4. **Long-term Memory**: User preferences and facts from earlier conversations are stored outside the model and added back into the context in later sessions. If a user previously identified as vegan, ChatGPT can offer suitable dining suggestions in future interactions.

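5. **Retrieval-Augmented Generation (RAG)**: Relevant passages are retrieved from external sources, such as documents, databases, or search results, and placed in the context at query time. This lets the model ground its answers in current, task-specific information rather than relying only on its training data.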

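6. **Tool Definition**: This component describes the functions available to the model, including their names, parameters, and purpose, so that it can request the right call with the right arguments. For example, when asked to book a flight, the model can request a flight-search tool, and the application runs that tool and returns accurate options.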

7. **Output Structure**: This involves formatting responses in a way that can be easily interpreted by other systems, such as JSON or tables.

The integration of these components shapes how an LLM processes input and generates output.
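To make the seven components concrete, here is a minimal sketch of how they might be combined into one request. The `ContextBundle` class, the `build_messages` function, and the `search_flights` tool are hypothetical names chosen for illustration, and the role/content message layout is only an assumption about the target chat API, not a specific vendor's format.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Hypothetical container for the seven components listed above."""
    instruction_prompt: str
    user_prompt: str
    history: list = field(default_factory=list)           # prior role/content turns
    long_term_memory: list = field(default_factory=list)  # remembered user facts
    retrieved_docs: list = field(default_factory=list)    # RAG snippets
    tools: list = field(default_factory=list)             # tool definitions
    output_schema: dict = field(default_factory=dict)     # expected response shape


def build_messages(ctx: ContextBundle) -> list:
    """Flatten the bundle into a list of role/content messages."""
    system_parts = [ctx.instruction_prompt]
    if ctx.long_term_memory:
        facts = "\n".join(f"- {m}" for m in ctx.long_term_memory)
        system_parts.append("Known user facts:\n" + facts)
    if ctx.retrieved_docs:
        docs = "\n".join(f"- {d}" for d in ctx.retrieved_docs)
        system_parts.append("Reference material:\n" + docs)
    if ctx.tools:
        system_parts.append("Available tools (JSON):\n" + json.dumps(ctx.tools))
    if ctx.output_schema:
        system_parts.append("Reply as JSON matching:\n" + json.dumps(ctx.output_schema))

    messages = [{"role": "system", "content": "\n\n".join(system_parts)}]
    messages.extend(ctx.history)  # conversation history keeps answers consistent
    messages.append({"role": "user", "content": ctx.user_prompt})
    return messages


# Example usage with made-up values.
example = ContextBundle(
    instruction_prompt="You are a travel assistant. Answer concisely.",
    user_prompt="Find me a flight to Lisbon next Friday.",
    history=[
        {"role": "user", "content": "I usually fly from Berlin."},
        {"role": "assistant", "content": "Noted, Berlin is your departure city."},
    ],
    long_term_memory=["User is vegan"],
    tools=[{"name": "search_flights", "parameters": {"origin": "string", "destination": "string"}}],
    output_schema={"flights": "list", "notes": "string"},
)
for message in build_messages(example):
    print(message["role"], "->", message["content"][:60])
```

Keeping each component in its own field makes it easy to see which part of the context is missing or oversized when a response goes wrong.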

The Importance of Context-Rich Prompts

In today’s AI landscape, the use of LLMs is becoming increasingly common, and the efficacy of AI agents hinges on their ability to gather and deliver context effectively. The primary role of an AI agent is not merely to respond but to collect pertinent information and extend context before engaging the LLM. This may involve incorporating data from databases, APIs, user profiles, or past interactions.
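The "gather first, then call the model" pattern described above could look roughly like the following sketch. The provider functions, the `gather_context` and `answer` helpers, and the `call_llm` parameter are all hypothetical; `call_llm` stands in for whatever client function actually sends a prompt to a model.

```python
from typing import Callable, Dict


def gather_context(user_id: str, providers: Dict[str, Callable[[str], str]]) -> str:
    """Call each context provider and join the non-empty results into labeled sections."""
    sections = []
    for label, provider in providers.items():
        text = provider(user_id)
        if text:  # skip sources that have nothing for this user
            sections.append(f"[{label}]\n{text}")
    return "\n\n".join(sections)


def answer(user_id: str, question: str,
           providers: Dict[str, Callable[[str], str]],
           call_llm: Callable[[str], str]) -> str:
    """Extend the question with gathered context before engaging the LLM."""
    context = gather_context(user_id, providers)
    prompt = f"{context}\n\nQuestion: {question}" if context else f"Question: {question}"
    return call_llm(prompt)


# Stand-in data sources; real ones would query a database, an API, or a profile store.
def profile_source(user_id: str) -> str:
    return {"u1": "Prefers morning workouts"}.get(user_id, "")


def past_interactions_source(user_id: str) -> str:
    return {"u1": "Asked about knee-friendly exercises last week"}.get(user_id, "")


providers = {"User profile": profile_source, "Past interactions": past_interactions_source}
# An echo function replaces the real model call so the assembled prompt is visible.
print(answer("u1", "What should I do today?", providers, call_llm=lambda p: p))
```

Two agents built on the same framework would differ mainly in which providers they register and how the gathered sections are worded.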

When two AI agents operate using the same framework, the key differentiator lies in how they engineer their instructions and context. A context-rich prompt ensures that the model comprehends not only the immediate question but also the broader goals, user preferences, and essential external facts needed for accurate outputs.

For example, consider a hypothetical fitness coaching agent called FitCoach. The difference between a well-structured prompt and a poorly structured one can lead to vastly different outcomes. A well-structured prompt instructs the agent to gather detailed information step by step before generating a personalized fitness and diet plan. In contrast, a poorly structured prompt may yield a generic plan that ignores the user’s specific needs, resulting in a less satisfactory experience.
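One way to picture the sequential information gathering in the FitCoach example is the sketch below. The field names, the questions, and the `next_step` function are assumptions made for illustration; the article does not specify which details FitCoach collects beyond the user's specific needs.

```python
# Hypothetical list of details the agent wants before it writes a plan.
REQUIRED_FIELDS = ["goal", "height_cm", "weight_kg", "activity_level", "dietary_restrictions"]

QUESTIONS = {
    "goal": "What is your main fitness goal?",
    "height_cm": "How tall are you, in centimeters?",
    "weight_kg": "What is your current weight, in kilograms?",
    "activity_level": "How active are you in a typical week?",
    "dietary_restrictions": "Do you have any dietary restrictions?",
}


def next_step(profile: dict) -> dict:
    """Ask for the first missing field, or build the plan prompt once all are known."""
    for name in REQUIRED_FIELDS:
        if profile.get(name) in (None, ""):
            return {"action": "ask", "field": name, "question": QUESTIONS[name]}
    facts = "\n".join(f"- {name}: {profile[name]}" for name in REQUIRED_FIELDS)
    prompt = "Create a personalized fitness and diet plan for this user:\n" + facts
    return {"action": "generate", "prompt": prompt}


# With an incomplete profile the agent keeps asking instead of producing a generic plan.
print(next_step({"goal": "lose weight"}))
```

A poorly structured agent would skip this loop and send only the user's first message to the model, which is what produces the generic plan described above.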

Context engineering effectively transforms basic chatbots into sophisticated, purpose-driven systems capable of meeting complex user demands.

Strategies for Developing Context-Rich Prompts

To harness the power of context-rich prompts, developers must focus on four core skills: writing context, selecting context, compressing context, and isolating context.

– **Writing Context**: This involves capturing and storing relevant information during interactions, similar to how humans take notes. For instance, a fitness coach agent should record user details as they are mentioned, ensuring that crucial facts are not lost later in the conversation.

– **Selecting Context**: It is essential for agents to retrieve only the relevant information when necessary. For example, when generating a workout plan, FitCoach should focus on details such as the user’s height, weight, and activity level while disregarding extraneous data.

– **Compressing Context**: In lengthy conversations, it may be necessary to summarize earlier information so that it fits within the model’s context window. A concise summary lets the agent retain key facts while still providing accurate outputs.

– **Isolating Context**: Breaking down information into distinct segments allows agents to tackle complex tasks more effectively. By delegating specific queries to specialized sub-agents, the overall process remains organized and efficient.
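The four skills above can be sketched in a few small helpers. Everything here is illustrative: the `Scratchpad` class, the helper names, and the sub-agent field lists are hypothetical, and the compression step uses plain truncation as a stand-in for a real summarizer such as an LLM call.

```python
from dataclasses import dataclass, field


@dataclass
class Scratchpad:
    """Writing context: persist facts as they come up in the conversation."""
    notes: dict = field(default_factory=dict)

    def write(self, key: str, value) -> None:
        self.notes[key] = value


def select_context(notes: dict, needed: list) -> dict:
    """Selecting context: keep only the keys the current task needs."""
    return {key: notes[key] for key in needed if key in notes}


def compress_history(turns: list, keep_last: int = 2, max_chars: int = 60) -> list:
    """Compressing context: fold older turns into one summary line.

    Truncation is used here only as a placeholder for a proper summarizer.
    """
    if len(turns) <= keep_last:
        return list(turns)
    split = len(turns) - keep_last
    older, recent = turns[:split], turns[split:]
    summary = " | ".join(turn[:max_chars] for turn in older)
    return [f"Summary of earlier turns: {summary}"] + recent


# Isolating context: each sub-agent sees only its own slice of the notes.
SUB_AGENT_FIELDS = {
    "workout": ["height_cm", "weight_kg", "activity_level"],
    "diet": ["weight_kg", "dietary_restrictions"],
}


def isolate_context(notes: dict, sub_agent: str) -> dict:
    return select_context(notes, SUB_AGENT_FIELDS[sub_agent])


pad = Scratchpad()
pad.write("height_cm", 180)
pad.write("weight_kg", 85)
pad.write("dietary_restrictions", "vegan")
pad.write("favorite_color", "blue")  # extraneous detail, never selected below
print(isolate_context(pad.notes, "diet"))
```

In this sketch, isolation is simply selection applied per sub-agent, which keeps each specialized prompt short and focused.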

These foundational practices are critical for designing production-grade AI agents that deliver reliable and safe user experiences.

In conclusion, the shift from prompt engineering to context engineering marks a significant evolution in the development of AI solutions. As the capabilities of LLMs continue to grow, the necessity of constructing and managing comprehensive context systems becomes paramount. By implementing context engineering principles, developers can create robust applications that meet the demands of modern AI users while ensuring accuracy, safety, and efficiency.