Researchers from the School of Cyber Science and Engineering at Southeast University and Purple Mountain Laboratories have introduced a scheme that protects data privacy during neural network inference. The study, titled “Efficient Privacy-Preserving Scheme for Secure Neural Network Inference,” targets data security in cloud computing environments.

As smart devices and cloud services proliferate, users increasingly rely on these platforms for data processing. That reliance carries risk: sensitive information transmitted in plaintext is exposed to unauthorized access, which threatens both users’ personal data and the confidentiality of the model’s parameters. The researchers’ scheme aims to protect user privacy while keeping data processing efficient.

Innovative Approach to Secure Data Processing

The proposed scheme combines homomorphic encryption with secure multi-party computation, so that inference can run without revealing sensitive data to the cloud servers. The study outlines three key innovations that improve the efficiency of the inference process.

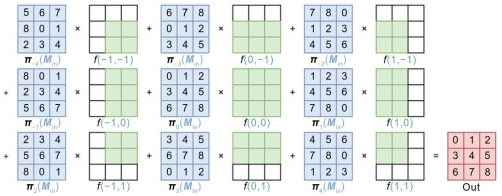

First, the inference operation is divided into three stages: merging, preprocessing, and online inference. This structure streamlines operations, making the process more efficient. Second, a network parameter merging technique reduces the number of multiplications required and minimizes ciphertext-plaintext operations. Third, the researchers developed a fast convolution algorithm that improves computational efficiency.
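One way parameter merging can cut multiplications is by folding two back-to-back linear layers into a single one before inference starts. The following is a minimal plaintext sketch of that idea, not the paper's implementation: the helper names (`matvec`, `matmul`, `merge_linear`) and the toy weights are hypothetical, and no encryption is involved. It relies only on the identity W2(W1·x + b1) + b2 = (W2·W1)·x + (W2·b1 + b2), which holds when no nonlinear activation sits between the two layers.

```python
# Illustrative plaintext sketch (hypothetical names, no encryption):
# fold two consecutive linear layers into one equivalent layer.

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrix A by matrix B (both lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def merge_linear(W1, b1, W2, b2):
    """Return (W, b) such that W x + b == W2 (W1 x + b1) + b2."""
    W = matmul(W2, W1)
    b = [t + c for t, c in zip(matvec(W2, b1), b2)]
    return W, b

# Toy layers: 2 inputs -> 3 hidden values -> 2 outputs, no activation between.
W1 = [[1.0, 2.0], [0.0, 1.0], [3.0, -1.0]]
b1 = [0.5, -1.0, 2.0]
W2 = [[1.0, 0.0, 2.0], [-1.0, 1.0, 0.0]]
b2 = [0.0, 1.0]
x = [2.0, -3.0]

# Layer-by-layer evaluation: 6 + 6 = 12 multiplications on the input path.
h = [a + b for a, b in zip(matvec(W1, x), b1)]
two_step = [a + b for a, b in zip(matvec(W2, h), b2)]

# Merged evaluation: the 2x2 merged matrix needs only 4 multiplications.
W, b = merge_linear(W1, b1, W2, b2)
one_step = [a + c for a, c in zip(matvec(W, x), b)]

print(two_step, one_step)
```

In this toy case the merged layer gives the same output with 4 multiplications instead of 12, and the merge itself can be computed once, ahead of time. Whether merging saves work in general depends on the layer shapes.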

The scheme leverages the CKKS homomorphic encryption algorithm, which facilitates high-precision floating-point computations. By merging consecutive linear layers, the scheme reduces both the number of communication rounds and the overall computational costs. Additionally, it transforms convolutional operations into matrix-vector multiplications, optimizing the use of ciphertext “slots.”
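To make the convolution-to-matrix-vector step concrete, here is a small plaintext sketch under stated assumptions: a single-channel image, a square kernel, stride 1, and no padding. The function names and the matrix layout are hypothetical and are not taken from the paper, and the code does not perform any CKKS encryption or slot packing. It only shows that a convolution can be written as one matrix applied to the flattened image, which is the form a packed ciphertext vector would be multiplied by.

```python
# Illustrative plaintext sketch (hypothetical names, no encryption):
# express a stride-1, unpadded 2D convolution as a matrix-vector product.

def conv2d_direct(image, kernel):
    """Sliding-window convolution as used in CNN layers (cross-correlation)."""
    H, W = len(image), len(image[0])
    k = len(kernel)
    return [
        [
            sum(kernel[a][b] * image[i + a][j + b]
                for a in range(k) for b in range(k))
            for j in range(W - k + 1)
        ]
        for i in range(H - k + 1)
    ]

def conv_as_matrix(kernel, H, W):
    """Build M so that M applied to the row-major flattened image
    equals the row-major flattened output of conv2d_direct."""
    k = len(kernel)
    out_h, out_w = H - k + 1, W - k + 1
    M = [[0.0] * (H * W) for _ in range(out_h * out_w)]
    for i in range(out_h):
        for j in range(out_w):
            for a in range(k):
                for b in range(k):
                    M[i * out_w + j][(i + a) * W + (j + b)] = kernel[a][b]
    return M

def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

image = [[1.0, 2.0, 3.0],
         [4.0, 5.0, 6.0],
         [7.0, 8.0, 9.0]]
kernel = [[1.0, 2.0],
          [0.0, -1.0]]

direct = conv2d_direct(image, kernel)
flat_image = [v for row in image for v in row]
via_matrix = matvec(conv_as_matrix(kernel, 3, 3), flat_image)

print(direct)      # [[0.0, 2.0], [6.0, 8.0]]
print(via_matrix)  # [0.0, 2.0, 6.0, 8.0]
```

The matrix depends only on the kernel and the image size, so it can be prepared before any user input arrives. The flattened image is the kind of vector that would occupy the ciphertext slots.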

Impressive Results and Comparisons

Experiments on the MNIST and Fashion-MNIST datasets demonstrated the scheme’s effectiveness, with an inference accuracy of 99.24% on MNIST and 90.26% on Fashion-MNIST.

When compared with leading methods such as DELPHI, GAZELLE, and CryptoNets, the new scheme showed substantial improvements. Notably, it reduced online-stage linear operation time by at least 11% and computational time by approximately 48%. Furthermore, it achieved a 66% reduction in communication overhead compared to non-merging approaches, underscoring its efficiency in convolution operations.

The research team includes Liquan Chen, Zixuan Yang, Peng Zhang, and Yang Ma. Their work offers a framework for protecting sensitive information during cloud-based neural network inference. The full paper can be accessed at https://doi.org/10.1631/FITEE.2400371.