Researchers have unveiled an optical computing method that executes complex tensor operations at the speed of light. The technology, developed by a team led by Dr. Yufeng Zhang from Aalto University, could make artificial intelligence (AI) processing considerably faster while reducing its power consumption.

The technique, termed “single-shot tensor computing,” processes data in a single pass of light. Tensor operations, which underpin nearly all AI tasks today, are usually run on graphics processing units (GPUs). As data volumes grow, however, GPUs run into limits on speed, power efficiency, and scalability. Those limits prompted Dr. Zhang and his international team to look for alternatives beyond electronic circuits.

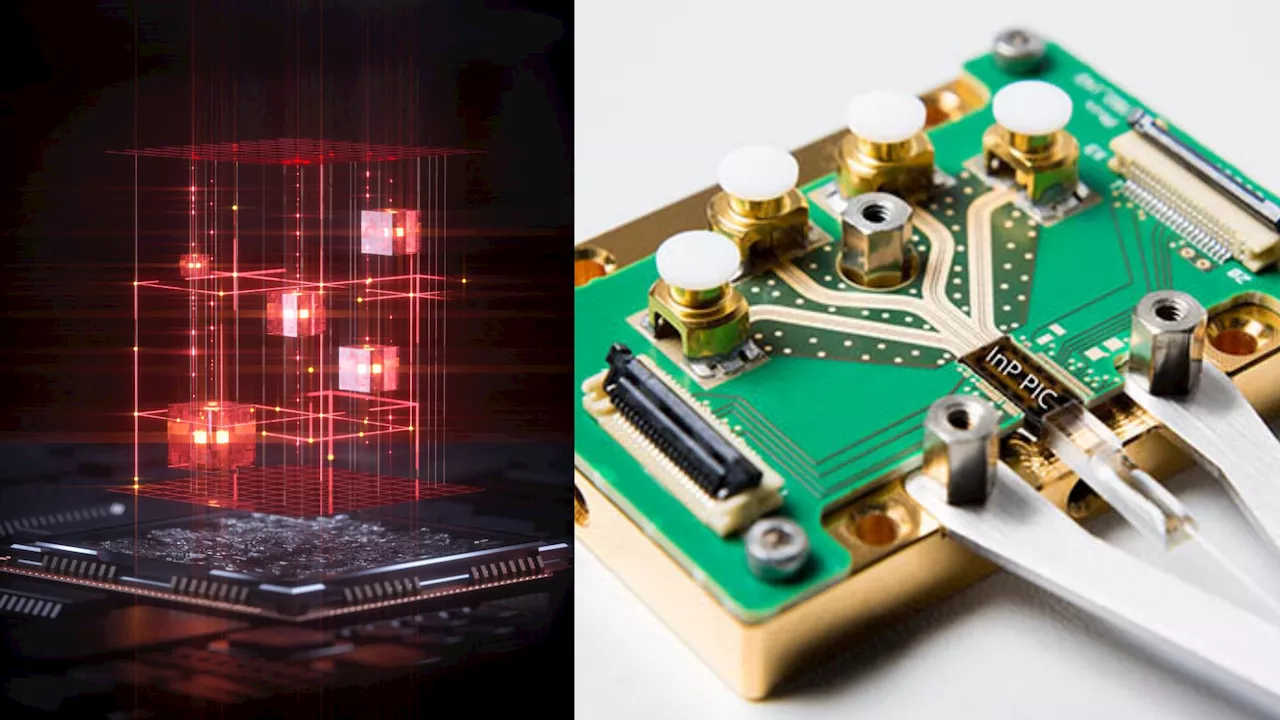

The team encodes digital information into the amplitude and phase of light waves. As these waves propagate, they interact in a way that reproduces the mathematical operations typically performed by deep learning systems. Dr. Zhang stated, “Our method performs the same kinds of operations that today’s GPUs handle, like convolutions and attention layers, but does them all at the speed of light.” The system needs no electronic switching: the computation takes place during light propagation itself.
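To make the idea of amplitude and phase encoding concrete, here is a minimal numerical sketch. It is an analogy only, not the team's optical setup: each light wave is modeled as a complex number, and the function names `encode` and `propagate`, along with the example weights, are hypothetical choices made for illustration.

```python
import cmath

def encode(amplitude, phase):
    """Model one light wave as a complex number (amplitude and phase)."""
    return cmath.rect(amplitude, phase)

def propagate(weights, field):
    """Toy linear 'propagation': each output is a weighted sum of all inputs,
    which is exactly a matrix-vector product."""
    return [sum(w * x for w, x in zip(row, field)) for row in weights]

# Assumed encoding: a phase of 0 stands for a positive value,
# a phase of pi for a negative one.
field = [encode(1.0, 0.0), encode(0.5, cmath.pi)]  # represents [1.0, -0.5]

# Hypothetical weights standing in for how the waves are combined.
weights = [[1.0, 1.0],
           [1.0, -1.0]]

output = propagate(weights, field)
print([round(z.real, 6) for z in output])  # [0.5, 1.5]
```

In this toy model the sign of a number lives in the phase, and adding waves together performs the multiply-and-accumulate step that a GPU would carry out electronically.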

Enhancing Computational Capabilities

The researchers extended the method by using multiple wavelengths of light, with each wavelength acting as a separate computational channel. This lets the system handle higher-order tensor operations in parallel. Dr. Zhang likened the process to a customs facility where parcels undergo various checks. “Imagine you’re a customs officer who must inspect every parcel through multiple machines with different functions and then sort them into the right bins,” he explained. In this analogy, the team's “optical hooks” connect each input to its corresponding output in a single operation.
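The role of the wavelength channels can be sketched in the same spirit. In the illustration below, a higher-order tensor is treated as a stack of matrices, one per channel, and each channel computes its own matrix-vector product. The function names and the data are assumptions made for this example, and the Python loop runs the channels one after another, whereas the optical system is described as handling them simultaneously.

```python
def matvec(matrix, vector):
    """Plain matrix-vector product."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def multi_channel(tensor, inputs):
    """tensor[c] is the matrix carried by channel c (one assumed wavelength);
    inputs[c] is the vector fed to that channel."""
    return [matvec(matrix, vector) for matrix, vector in zip(tensor, inputs)]

# A 3 x 2 x 2 tensor: three hypothetical wavelength channels.
tensor = [
    [[1, 0], [0, 1]],  # channel 0: identity
    [[0, 1], [1, 0]],  # channel 1: swap the two entries
    [[2, 0], [0, 2]],  # channel 2: double every entry
]
inputs = [[3, 4], [3, 4], [3, 4]]

outputs = multi_channel(tensor, inputs)
print(outputs)  # [[3, 4], [4, 3], [6, 8]]
```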

A further benefit of the optical approach is its simplicity. The interactions occur passively, with no external control circuitry needed to manage the computation. This reduces power consumption and makes integration into existing systems easier.

Professor Zhipei Sun, who heads Aalto’s Photonics Group, emphasized the versatility of the method across different optical platforms. “In the future, we plan to integrate this computational framework directly onto photonic chips, enabling light-based processors to perform complex AI tasks with extremely low power consumption,” he stated.

Pathway to Commercial Use

The research team expects the technology to move into commercial hardware. Dr. Zhang estimates that the method could be integrated into platforms developed by major companies within the next three to five years. The researchers believe this could significantly accelerate AI workloads in fields that rely on real-time processing, including imaging, large language models, and scientific simulations.

Furthermore, the optical approach promises reduced energy consumption, addressing a pressing concern as AI models continue to expand. “This will create a new generation of optical computing systems, significantly accelerating complex AI tasks across a myriad of fields,” Dr. Zhang concluded.

The findings have been published in the journal Nature Photonics. The work marks a step forward for optical computing and positions the technology as a candidate for next-generation AI processing.