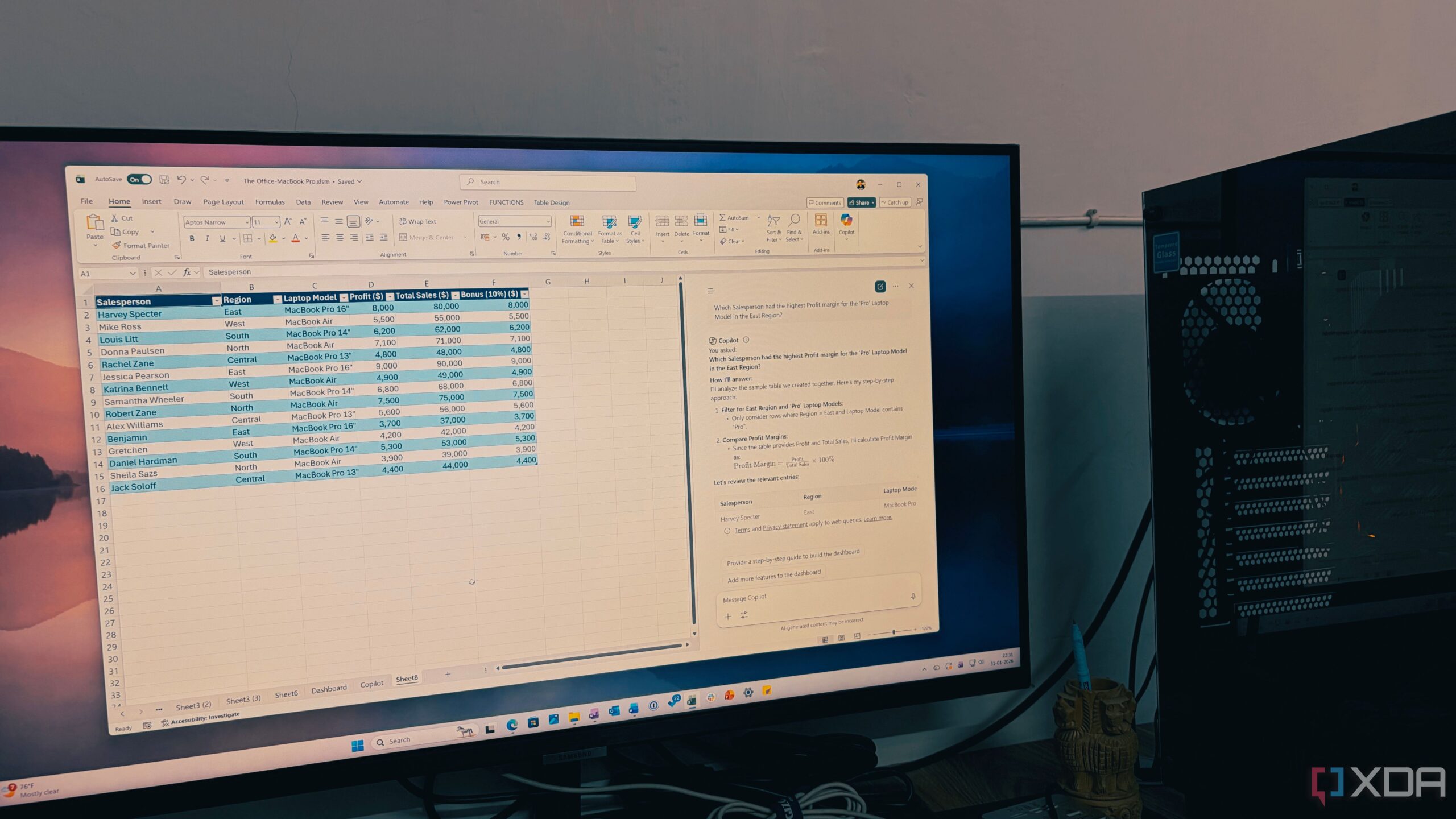

Tech journalist Pranav Dixit recently explored Google’s new “Personal Intelligence” feature, revealing how much personal data the company has amassed about its users. The feature, part of the Gemini platform and Google’s AI search mode, lets the company’s AI draw on a wealth of stored information, often with surprising and unsettling results.

While using the feature, Dixit found that Google could retrieve details such as his license plate number and even his parents’ vacation history without his supplying them. He said it felt as though Google had been taking notes on his life and had finally handed him the notebook. The episode highlights the depth of data that tech companies collect and retain on their users.

Last week, Google rolled out the Personal Intelligence feature to subscribers of Google AI Pro and AI Ultra. Once activated, the AI can access users’ Gmail and Google Photos accounts. A more advanced version released earlier this month for the Gemini app delves even deeper, examining users’ Search and YouTube histories. Essentially, if you’ve used Google for any purpose, the AI is likely capable of retrieving that information.

This strategy is part of Google’s effort to maintain its competitive advantage in the AI landscape. Unlike rivals such as OpenAI, Google has decades of user data from billions of individuals. The company can infer extensive insights based on users’ search queries and the various confirmations and reminders found within their emails.

For many, the thought of allowing an AI to access such a vast amount of personal data is alarming. Google has reassured users that it handles personal information with care. In a recent blog post, Josh Woodward, a Google vice president, emphasized that the company trains its AI only on user prompts and the responses generated, not on personal emails or photos. He stated, “We don’t train our systems to learn your license plate number. We train them to understand that when you ask for one, we can locate it.”

Privacy concerns aside, Dixit noted that access to this data can make for a genuinely useful, “scary-good” personal assistant. For instance, when he requested sightseeing suggestions for his parents, the AI correctly deduced that they had already explored various hiking trails in the Bay Area, so it recommended museums and gardens instead.

The AI drew these conclusions from various “breadcrumbs,” including emails, photos of past hikes, and even a Google search for “easy hikes for seniors.” It also identified his license plate number from stored images and referenced emails to confirm when his car insurance was due for renewal.

The implications of this feature extend beyond privacy. As AIs become more adept at using personal data, their interactions may come to feel more human. That could lead users to treat them as reliable companions, which raises significant mental health concerns. Dixit described his frustration with earlier chatbot interactions, saying he would “pour my soul into ChatGPT and get a smart answer,” only for it to “forget I existed like a genius goldfish.”

As discussions about AI continue to evolve, the emergence of features like Google’s Personal Intelligence underscores the need for ongoing dialogue about privacy and the ethical use of personal data. While the technology promises enhanced user experiences, it also demands careful consideration of its societal impacts.