Researchers increasingly recognize the need to maintain and update their data sets, especially in fields such as ecology, where studies can run for decades. Since 1977, the Portal Project has studied the interactions among rodents, ants, and plants in Arizona, and that longevity depends on consistent data management. In recent years, the project has modernized its approach so that its data sets can be updated efficiently while earlier versions are preserved.

Ethan White, an environmental data scientist at the University of Florida, has been involved with the Portal Project since 2002. “Data collection is not a one-time effort,” he says. In 2019, to keep the data continuously updated while keeping previous versions accessible, White and his team implemented a data workflow that combines GitHub, Zenodo, and Travis CI. Roughly 620 versions of the data set are now stored in the project’s Zenodo repository.
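Travis CI is a continuous-integration service. One common use of such a service is to run quality checks automatically on every proposed change to the data and to flag the change as failed if the checks find problems. The sketch below shows the general shape of such a check for a CSV file. The column names, the plot range and the rules are hypothetical illustrations, not the Portal Project’s actual schema or tests.

```python
import csv
import io
import sys
from datetime import date

# Hypothetical schema for illustration only; these are NOT the Portal
# Project's actual column names or validation rules.
REQUIRED_COLUMNS = ["date", "plot", "species", "weight_g"]
VALID_PLOTS = set(range(1, 25))  # assumed plot numbers 1-24


def validate_rows(csv_text):
    """Return a list of problems found in the CSV text (empty if none)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    missing = [col for col in REQUIRED_COLUMNS if col not in header]
    if missing:
        return ["missing columns: " + ", ".join(missing)]

    problems = []
    # Data rows start on line 2 because line 1 is the header.
    for line_no, row in enumerate(reader, start=2):
        # Short rows yield None for absent fields, so normalize to "".
        value = {col: (row[col] or "").strip() for col in REQUIRED_COLUMNS}
        try:
            date.fromisoformat(value["date"])
        except ValueError:
            problems.append(f"line {line_no}: invalid date {value['date']!r}")
        try:
            plot_ok = int(value["plot"]) in VALID_PLOTS
        except ValueError:
            plot_ok = False
        if not plot_ok:
            problems.append(f"line {line_no}: unknown plot {value['plot']!r}")
        if value["weight_g"]:  # blank weights are allowed (not measured)
            try:
                if float(value["weight_g"]) <= 0:
                    problems.append(f"line {line_no}: non-positive weight")
            except ValueError:
                problems.append(f"line {line_no}: weight is not a number")
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], newline="", encoding="utf-8") as handle:
        issues = validate_rows(handle.read())
    for issue in issues:
        print(issue)
    # A nonzero exit status is what makes a CI job report failure.
    sys.exit(1 if issues else 0)
```

A CI configuration would then run something like `python check_data.py rodents.csv` (both file names hypothetical) on each submission, so that bad rows are caught before a new version is released.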

Despite the clear need for systematic data management, many researchers struggle to navigate the available options for updating their data. Crystal Lewis, a freelance data-management consultant based in St. Louis, Missouri, notes the lack of standardized guidelines. “There are no standards for repositories; the journals are not telling you how to correct a data set or how to cite new data, so people are just winging it,” she explains.

Choose the Right Repository for Your Data

Choosing an appropriate repository is a crucial first step. Personal websites and cloud storage might seem convenient, but a repository is a safer, more accessible home for research data. Kristin Briney, a librarian at the California Institute of Technology, points out that repositories provide better long-term storage, often with multiple backups.

By the end of 2024, U.S. federal funding agencies will require researchers to deposit their data in recognized repositories, and some, such as the National Institutes of Health, already do. Many journals also require data to be deposited in a repository. PLOS ONE, for example, recommends several general and subject-specific options, including the Dryad Digital Repository and the Open Science Framework.

Repositories do more than store files. They promise that archived data will remain unchanged, and they assign each data set a persistent identifier that keeps pointing to it. This means that researchers, and anyone else, can retrieve the exact data behind an analysis in the future, even as definitions and methods in the field evolve.
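Many repositories, Zenodo among them, list a checksum alongside each archived file, which lets anyone confirm that a downloaded copy is byte-for-byte identical to the archived one. The sketch below assumes the repository publishes an MD5 checksum, sometimes written with an `md5:` prefix; repositories that use another algorithm would need the corresponding `hashlib` function instead.

```python
import hashlib


def stream_md5(binary_stream, chunk_size=1 << 20):
    """MD5 hex digest of a binary stream, read in chunks to limit memory use."""
    digest = hashlib.md5()
    for chunk in iter(lambda: binary_stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def matches_archive(path, published_checksum):
    """True if the local file's MD5 matches the checksum the repository lists.

    Accepts a bare hex digest or one prefixed with 'md5:' (case-insensitive).
    """
    expected = published_checksum.strip().lower()
    if expected.startswith("md5:"):
        expected = expected[len("md5:"):]
    with open(path, "rb") as handle:
        return stream_md5(handle) == expected
```

Reading in chunks keeps memory use flat even for multi-gigabyte data files.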

Maintain Version Control for Transparency

Creating distinct versions of data sets is vital for both transparency and accessibility. Overwriting old data can hinder future analyses and obscure how data have evolved over time. Lewis emphasizes, “Three months from now, you will forget what you did — you will forget which version you’re working on.” By maintaining clear version control, researchers can avoid confusion and ensure reproducibility.
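Keeping old versions is what makes it possible to see how a data set has changed. As a minimal sketch, assuming each version is a CSV file whose records share a unique identifier column (here a hypothetical `record_id`), two versions can be compared record by record:

```python
import csv
import io


def diff_versions(old_csv, new_csv, key):
    """Compare two CSV snapshots of a data set, matching records on `key`.

    Returns three sorted lists of key values: records added in the new
    version, records removed from it, and records whose fields changed.
    Assumes `key` is unique; a duplicated key keeps only its last row.
    """
    def load(text):
        return {row[key]: row for row in csv.DictReader(io.StringIO(text))}

    old, new = load(old_csv), load(new_csv)
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    changed = sorted(k for k in old.keys() & new.keys() if old[k] != new[k])
    return added, removed, changed
```

Keys are compared and sorted as strings, so "10" sorts before "2"; that is harmless for reporting but worth knowing. Had the old file been overwritten, none of this comparison would be possible.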

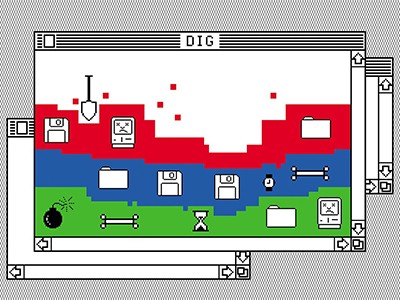

Many data repositories, including Zenodo, support versioning: when a data set is updated, the new version is archived alongside the old ones, and each version receives its own digital object identifier (DOI), so researchers can cite the exact version they used. Zenodo also assigns a “concept” DOI that always resolves to the latest version. For data managed outside a repository, platforms such as GitHub let researchers publish a new ‘release’ each time the data are updated, and a consistent manual file-naming convention can also distinguish versions.
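Releases need numbers. One widely used convention is semantic versioning (MAJOR.MINOR.PATCH). Applying it to data, and the mapping of kinds of change to version levels in the comments below, are assumptions for illustration rather than a rule taken from any particular project:

```python
def bump_version(version, change):
    """Return the next MAJOR.MINOR.PATCH string for the given kind of change.

    Accepts an optional leading 'v', as in GitHub-style tags like 'v1.4.2'.
    """
    major, minor, patch = (int(part) for part in version.lstrip("v").split("."))
    if change == "major":  # e.g. columns renamed or removed; breaks old code
        return f"{major + 1}.0.0"
    if change == "minor":  # e.g. new rows appended from the latest survey
        return f"{major}.{minor + 1}.0"
    if change == "patch":  # e.g. an error in an existing record corrected
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")
```

The appeal of this scheme is that the number itself signals to users whether an update could break their analysis code.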

Standardize File Naming and Document Changes

Effective data management extends beyond version control; it also involves a clear file-naming convention. Briney advises researchers to include the date in their file names, formatted as YYYYMMDD or YYYY-MM-DD. Because this year-first format sorts chronologically, files listed alphabetically also appear in date order, which makes it easy to group related files and to find the most recent one.
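A small helper can apply the date convention consistently. In this sketch, the `stem_date.ext` layout and the underscore separator are hypothetical choices; only the year-first date format comes from the advice above.

```python
from datetime import date


def dated_filename(stem, day, ext="csv", compact=False):
    """Build a name such as 'survey_2024-05-01.csv' (or 'survey_20240501.csv')."""
    stamp = day.strftime("%Y%m%d") if compact else day.isoformat()
    return f"{stem}_{stamp}.{ext}"


if __name__ == "__main__":
    names = [
        dated_filename("survey", date(2024, 11, 3)),
        dated_filename("survey", date(2023, 12, 25)),
        dated_filename("survey", date(2024, 2, 9)),
    ]
    # Plain string sorting yields chronological order:
    print(sorted(names))
    # ['survey_2023-12-25.csv', 'survey_2024-02-09.csv', 'survey_2024-11-03.csv']
```

A month-first format such as 11-03-2024 would not sort this way, which is why the year must come first.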

Documentation is equally important. Researchers should provide metadata that explain the variables used and how the data are organized within files. This detail benefits both the original researcher and anyone who works with the data later. Sabina Leonelli, who studies big-data methods at the Technical University of Munich, stresses the importance of documenting the terminology and queries that shaped the data. Because definitions change over time, capturing the original context is crucial for interpreting the data correctly.
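One lightweight form of such metadata is a data dictionary: a table listing each variable with its type, units and meaning. The sketch below writes one as CSV and checks that every column in a data file is documented. The variables are hypothetical examples; the description field is also a natural place to record the definitions Leonelli mentions.

```python
import csv
import io

# Hypothetical variables for illustration; replace with your own.
DATA_DICTIONARY = [
    {"variable": "date", "type": "date", "units": "",
     "description": "Survey date, YYYY-MM-DD"},
    {"variable": "plot", "type": "integer", "units": "",
     "description": "Plot number"},
    {"variable": "species", "type": "string", "units": "",
     "description": "Two-letter species code"},
    {"variable": "weight_g", "type": "number", "units": "g",
     "description": "Body mass; blank if not measured"},
]
FIELDS = ["variable", "type", "units", "description"]


def dictionary_csv(entries):
    """Render data-dictionary entries as CSV text with a header row."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(entries)
    return out.getvalue()


def undocumented_columns(data_header, entries):
    """List columns in a data file that have no data-dictionary entry."""
    documented = {entry["variable"] for entry in entries}
    return [col for col in data_header if col not in documented]
```

Running `undocumented_columns` as part of an automated check keeps the documentation from silently falling behind the data.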

Lastly, maintain a change log. Recording what changed in each version, and when, makes a data set’s history transparent and lets researchers retrace their own methods over time.
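A change log can be as simple as a plain-text file with the newest entry first. The entry layout below (version, date, bulleted changes) and the file handling are assumptions for illustration, not a required format:

```python
from datetime import date
from pathlib import Path


def changelog_entry(version, day, changes):
    """Format one change-log entry: a 'version (date)' line plus bullets."""
    lines = [f"{version} ({day.isoformat()})"]
    lines += [f"- {change}" for change in changes]
    return "\n".join(lines) + "\n"


def prepend_entry(changelog_text, entry):
    """Put the newest entry first, separated from older ones by a blank line."""
    return entry + ("\n" + changelog_text if changelog_text else "")


def record_change(path, version, day, changes):
    """Add an entry to the change-log file at `path`, creating it if needed."""
    log = Path(path)
    existing = log.read_text(encoding="utf-8") if log.exists() else ""
    entry = changelog_entry(version, day, changes)
    log.write_text(prepend_entry(existing, entry), encoding="utf-8")
```

For example, `record_change("CHANGELOG.txt", "1.5.0", date.today(), ["Added May survey rows"])` (file name and text hypothetical) adds a dated entry above all earlier ones.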

By following these guidelines, researchers can strengthen their data-management practices and keep their work reproducible and accessible for future study. The Portal Project shows how systematic, modern data practices can sustain a long-term study and advance scientific research.