In the evolving landscape of artificial intelligence, the security of autonomous AI agents has emerged as a critical issue for developers and organizations. As these agents gain the ability to execute code and interact with other systems, the potential for unintended actions or malicious breaches grows with them. A recent post on the Greptile Blog examines kernel-level sandboxing techniques for building secure environments around these tools and reducing those risks.

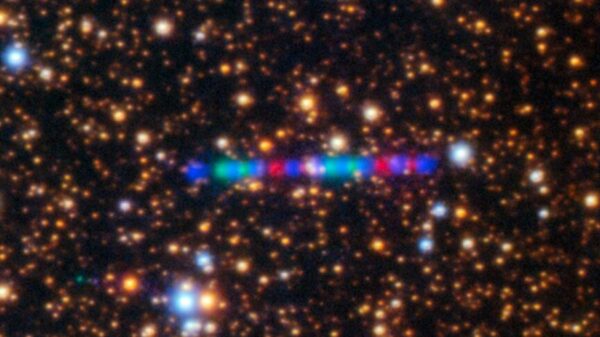

Sandboxing restricts an AI agent’s access to system resources, akin to confining a curious child to a playpen. The Greptile analysis focuses on the ‘open’ syscall, the Linux kernel entry point for opening files, and uses it to show how containers conceal files and directories from AI agents. By giving the agent its own mount namespace and controlling which mount points appear inside it, developers can keep sensitive information, such as production databases and critical infrastructure, out of the agent’s reach.
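From the agent's side, a concealed file looks no different from one that never existed: the open call fails with ENOENT. The Python sketch below illustrates only that observation. The helper name `probe_open` and both file names are hypothetical, and the "hidden" file is simply never created, standing in for a path that a container has masked.

```python
import errno
import os
import tempfile


def probe_open(path: str) -> str:
    """Try to open `path` read-only and report how the kernel answered."""
    try:
        fd = os.open(path, os.O_RDONLY)
    except OSError as exc:
        # errno.errorcode maps the numeric error to its symbolic name.
        return errno.errorcode.get(exc.errno, str(exc.errno))
    os.close(fd)
    return "OK"


with tempfile.TemporaryDirectory() as sandbox_root:
    # A file the agent is allowed to see (hypothetical name).
    visible = os.path.join(sandbox_root, "notes.txt")
    with open(visible, "w") as fh:
        fh.write("visible to the agent\n")

    # Stand-in for a masked path: it is never created in this view.
    hidden = os.path.join(sandbox_root, "prod-credentials.txt")

    visible_result = probe_open(visible)
    hidden_result = probe_open(hidden)

print(visible_result, hidden_result)
```

Because the failure is an ordinary "no such file" error, the agent gets no signal that anything was withheld, which is part of what makes this form of concealment attractive.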

Understanding Syscall Interception in Containerized Environments

The approach outlined in the Greptile Blog is firmly rooted in practical kernel mechanics. When an AI agent attempts to access a file outside its designated sandbox, the kernel’s syscall handling can redirect or deny the request. The two supporting features do different jobs: seccomp filters restrict which syscalls the agent may issue, while cgroups cap the CPU, memory, and other resources it can consume. Both are enforced inside the kernel, so they add little runtime overhead. Tracing a sandboxed process with a tool like strace shows which calls are intercepted and how they return, which makes it possible to confirm that the restrictions hold without disrupting normal operation.
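To make the allow-or-deny decision concrete, here is a toy user-space model of an allowlist policy in Python. It does not install a real seccomp filter, and the names `Verdict`, `ALLOWED_SYSCALLS`, and `decide` are assumptions made for illustration. The two outcomes loosely mirror the kernel's `SECCOMP_RET_ALLOW` and `SECCOMP_RET_ERRNO` actions.

```python
import errno
from typing import NamedTuple, Optional


class Verdict(NamedTuple):
    action: str          # "ALLOW" or "ERRNO"
    err: Optional[int]   # errno handed back to the caller when action == "ERRNO"


# Hypothetical allowlist for an agent that only uses already-open files.
ALLOWED_SYSCALLS = frozenset({"read", "write", "close", "exit_group"})


def decide(syscall_name: str) -> Verdict:
    """Allow listed syscalls; fail everything else with EPERM."""
    if syscall_name in ALLOWED_SYSCALLS:
        return Verdict("ALLOW", None)
    return Verdict("ERRNO", errno.EPERM)


for name in ("read", "openat", "mount"):
    verdict = decide(name)
    label = verdict.action if verdict.err is None else errno.errorcode[verdict.err]
    print(f"{name:8s} -> {label}")
```

One design point worth keeping in mind: a real seccomp filter sees the syscall number and its raw argument values, but it cannot follow a pointer to read the pathname being opened. Path-based concealment therefore has to come from the filesystem view rather than from the filter.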

Discussion on Hacker News highlights scalability challenges associated with kernel-level sandboxing. Commenters note that while the technique works well where low overhead matters, integrating it into AI workflows requires careful tuning to prevent latency spikes, particularly in scenarios involving agent-driven code reviews or automated deployments.

The Role of Namespaces in Agent Isolation

Namespaces play a pivotal role in the kernel-level sandboxing strategy. Linux namespaces allow for the creation of isolated views of system resources, including processes, networks, and filesystems. This ensures that an agent’s perspective remains tightly controlled. The Greptile Blog provides a step-by-step breakdown of how unsharing namespaces can create a sanitized filesystem for the agent, effectively concealing unnecessary paths and reducing risks such as path traversal attacks.
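A minimal sketch of that idea in Python, driving the util-linux `unshare` command: it enters new user and mount namespaces and mounts an empty tmpfs over a directory so the command run inside sees nothing there. This is an illustration under stated assumptions, not the Greptile Blog's own procedure. The function names and the example path are hypothetical, and it assumes a Linux host that permits unprivileged user namespaces.

```python
import shlex
import shutil
import subprocess
from typing import List, Optional


def build_masked_command(hidden_dir: str, agent_cmd: str) -> List[str]:
    """Build an argv that runs `agent_cmd` with `hidden_dir` masked by an empty tmpfs."""
    # `agent_cmd` is passed to the shell verbatim, so it must be trusted input.
    script = f"mount -t tmpfs tmpfs {shlex.quote(hidden_dir)} && {agent_cmd}"
    return [
        "unshare",
        "--user",           # new user namespace, so real root is not required
        "--map-root-user",  # map the caller to root inside that namespace
        "--mount",          # new mount namespace; mounts stay private to it
        "sh", "-c", script,
    ]


def run_masked(hidden_dir: str, agent_cmd: str) -> Optional[str]:
    """Run the command; return its stdout, or None if the host does not permit it."""
    if shutil.which("unshare") is None:
        return None
    try:
        proc = subprocess.run(
            build_masked_command(hidden_dir, agent_cmd),
            capture_output=True, text=True, timeout=10,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    return proc.stdout if proc.returncode == 0 else None


# Hypothetical directory to conceal; printing the command does not execute it.
command = build_masked_command("/srv/prod data", "ls -A '/srv/prod data'")
print(shlex.join(command))
```

Calling `run_masked` with a real directory should return an empty listing where the host allows it, and `None` where user namespaces are disabled. The mount exists only inside the new namespace, so the host's view of the directory is unchanged.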

This technique reflects broader cybersecurity practices. An article from G2 emphasizes the importance of sandboxing for observing and analyzing potentially malicious code in isolation, a concept that is directly applicable to AI agents executing untrusted scripts derived from natural language prompts.

Industry adoption of these methods is already underway. Greptile’s platform, detailed in their Series A announcement, incorporates kernel-level safeguards to enhance AI code reviews, enabling the identification of bugs while restricting unauthorized data access. Similarly, the awesome-sandbox repository on GitHub collects tools tailored for AI-specific sandboxing, promoting open-source collaboration.

Despite these advancements, challenges persist. Kernel vulnerabilities, such as those outlined in a Medium post regarding CVE-2025-38236, illustrate the necessity for continuous vigilance. Exploits targeting sandbox escapes through kernel flaws could undermine even the most robust setups, prompting experts to advocate for layered defenses that combine kernel hardening with user-space monitoring.

Looking forward, the integration of kernel-level sandboxing with emerging AI frameworks is poised to redefine the reliability of AI agents. For instance, The Sequence examines micro-container architectures like E2B, which adhere to similar principles to develop secure execution environments for AI tasks. These advancements indicate a shift toward proactive security models, allowing agents to operate under inherent constraints rather than relying on retrofitted patches.

As AI agents continue to expand across various sectors, from software development to autonomous systems, mastering kernel-level sandboxing will be vital. By leveraging detailed technical insights from sources like the Greptile Blog, engineers can strengthen their defenses, ensuring that innovation progresses without compromising security. This comprehensive approach not only minimizes risks but also enables AI technologies to thrive in controlled and predictable environments.