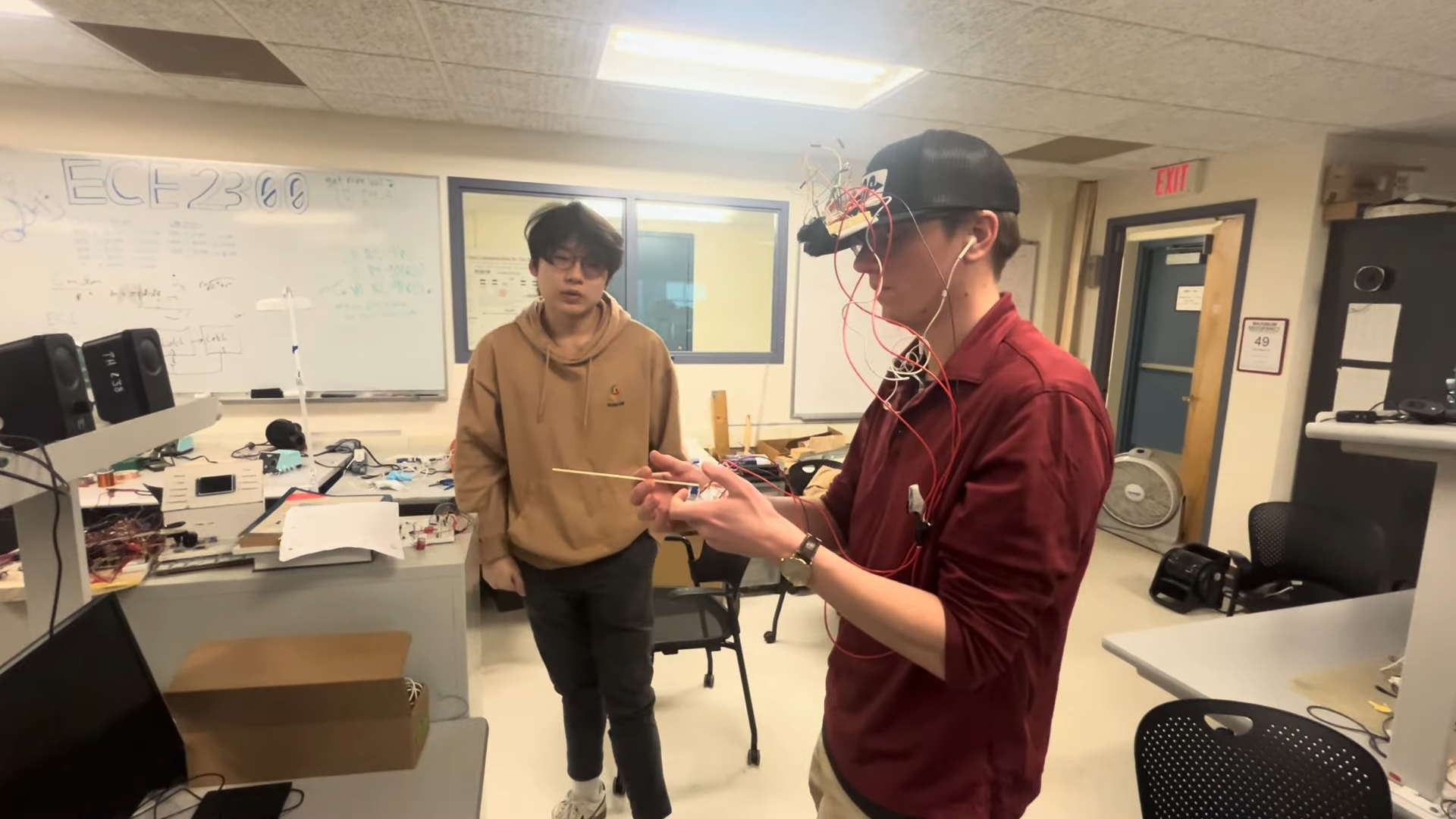

Anishka Raina, Arnav Shah, and Yoon Kang, students in Cornell University's ECE 4760 course, have built a hat that uses spatial audio to make the wearer more aware of their surroundings. Distance measurements become stereo audio cues, a simple form of sensory augmentation.

The project is built around a Raspberry Pi Pico paired with a TF-Luna LiDAR sensor, which measures the distance to nearby objects. The sensor is mounted on the hat, so the wearer scans the environment by panning it from side to side. The design has no head tracking. Instead, the wearer sets a potentiometer to tell the microcontroller which direction they are facing while they scan.
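The write-up doesn't include firmware, so here is a minimal Python sketch of how the two inputs could be read. It assumes the TF-Luna is in UART mode, where it streams 9-byte frames: a `0x59 0x59` header, little-endian distance in centimetres, little-endian signal strength, two temperature bytes, and a checksum equal to the low byte of the sum of the first eight bytes. It also assumes a 12-bit ADC reading (0 to 4095, the Pico ADC's resolution) that maps linearly onto a hypothetical ±90° heading range. The function names and ranges are illustrative, not the students' code.

```python
import struct

FRAME_HEADER = b"\x59\x59"  # TF-Luna UART frame header
FRAME_LEN = 9               # header(2) + distance(2) + strength(2) + temp(2) + checksum(1)


def parse_tf_luna_frame(frame: bytes):
    """Return (distance_cm, strength) for a valid 9-byte frame, or None if invalid."""
    if len(frame) != FRAME_LEN or frame[:2] != FRAME_HEADER:
        return None
    # Checksum is the low byte of the sum of the first eight bytes.
    if (sum(frame[:8]) & 0xFF) != frame[8]:
        return None
    # Distance (cm) and signal strength are little-endian unsigned 16-bit values.
    distance_cm, strength = struct.unpack_from("<HH", frame, 2)
    return distance_cm, strength


def pot_to_heading_deg(adc_value: int, adc_max: int = 4095,
                       min_deg: float = -90.0, max_deg: float = 90.0) -> float:
    """Hypothetical linear map from a raw potentiometer ADC reading to a heading angle."""
    adc_value = max(0, min(adc_value, adc_max))  # clamp out-of-range readings
    return min_deg + (max_deg - min_deg) * adc_value / adc_max
```

For example, the frame `59 59 2C 01 10 00 00 00 EF` decodes to a distance of 300 cm with a signal strength of 16, and a frame whose checksum doesn't match is discarded rather than misread.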

Once the Pico receives data from the LiDAR sensor, it works out how close nearby objects are and where they lie. It then turns this into a stereo audio signal that conveys each object's distance and direction. Direction is encoded with interaural time difference (ITD), the spatial audio cue in which a sound reaches one ear slightly before the other, which lets the wearer judge where obstacles are relative to them.
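The article doesn't say how the students computed the delay. One common way to turn a direction into an ITD is Woodworth's spherical-head approximation, ITD = (r/c)(θ + sin θ), where r is the head radius, c the speed of sound, and θ the azimuth. The Python sketch below uses that model (an assumption, not necessarily the project's method), delays the far ear's channel by the matching number of samples, and adds a hypothetical linear distance-to-loudness mapping. The head radius, sample rate, and 8 m maximum range are assumed values.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate, dry air at about 20 °C
HEAD_RADIUS_M = 0.0875      # assumed average adult head radius


def woodworth_itd_s(azimuth_deg: float) -> float:
    """ITD in seconds from Woodworth's spherical-head model.

    Positive azimuth means the source is to the right; input is clamped to +/-90 degrees.
    """
    theta = math.radians(max(-90.0, min(90.0, azimuth_deg)))
    # Woodworth: ITD = (r / c) * (theta + sin(theta)); the sign follows theta.
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


def stereo_delays_samples(azimuth_deg: float, sample_rate_hz: int = 44100):
    """Return (left_delay, right_delay) in whole samples; only the far ear is delayed."""
    delay = round(abs(woodworth_itd_s(azimuth_deg)) * sample_rate_hz)
    if azimuth_deg > 0:  # source on the right, so sound reaches the left ear later
        return delay, 0
    return 0, delay      # source on the left (or dead ahead, where delay is 0)


def distance_to_gain(distance_cm: float, max_cm: float = 800.0) -> float:
    """Hypothetical mapping: gain 1.0 at 0 cm falling linearly to 0.0 at max_cm."""
    return max(0.0, 1.0 - distance_cm / max_cm)
```

At 90° the model gives roughly 0.66 ms, about 29 samples at 44.1 kHz, which is close to the largest ITD a typical adult head produces. Delaying whole samples keeps the per-sample work trivial on a microcontroller, at the cost of angular resolution limited by the sample period.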

The build shows how an everyday wearable can be turned into a perception aid using inexpensive parts. It joins a line of similar sensory-augmentation projects that have drawn interest before, part of ongoing work on assistive technology.

Beyond the technical work, Raina, Shah, and Kang's project touches on personal safety and situational awareness. As wearable technology matures, devices like this could help people who need assistance navigating their surroundings.