The competition for dominance in artificial intelligence (AI) is intensifying among U.S. tech companies, driven by a belief in its transformative potential and fears of falling behind global rivals like China. This urgency often overshadows significant concerns about safety and the societal impact of rapid AI deployment. As the race accelerates, calls for a shift in approach to ensure that innovation aligns with human values are growing louder.

Techno-Utopian Visions and Reality

In a recent blog post, Sam Altman, CEO of OpenAI, articulated a vision of artificial superintelligence as a revolutionary leap forward. He described a future where robots manage supply chains, manufacture new technologies, and significantly impact industries. Tech leaders, including Elon Musk and Marc Andreessen, forecast a world where AI solves critical issues like disease and environmental degradation, promising a future of abundance. However, these aspirations have a darker side: a potential transformation of society in which human interaction diminishes as people retreat into virtual realms, relying on universal basic income funded by technology companies.

This techno-optimistic perspective echoes sentiments from the 1930s Technocracy movement, which advocated for governance by technical experts. Critics argue that such a viewpoint neglects the complexities of human experience and the essential role of human agency in decision-making. Bringing social scientists and ethicists into AI development discussions is increasingly seen as vital to balancing technical ambition with those human concerns.

Challenges of Social Dislocation and Governance

The rapid advancement of AI poses significant risks, particularly in its potential to displace jobs. Predictions indicate that up to half of entry-level white-collar positions could disappear in the next five years, leaving many without clear pathways to new employment. While the Industrial Revolution allowed for gradual job creation, the pace of AI’s impact threatens to leave a void in the professional landscape, disrupting upward mobility and economic stability.

Moreover, the governance of AI development remains fragmented. International efforts such as the 2023 Bletchley Declaration aimed to establish safety standards for AI, yet the U.S. and UK declined to endorse subsequent commitments at the 2025 Paris Global AI Summit. The need for binding global regulations becomes more pressing as the technology evolves. Currently, only two percent of global AI research and development focuses on safety, and just five percent addresses the alignment of AI with human objectives.

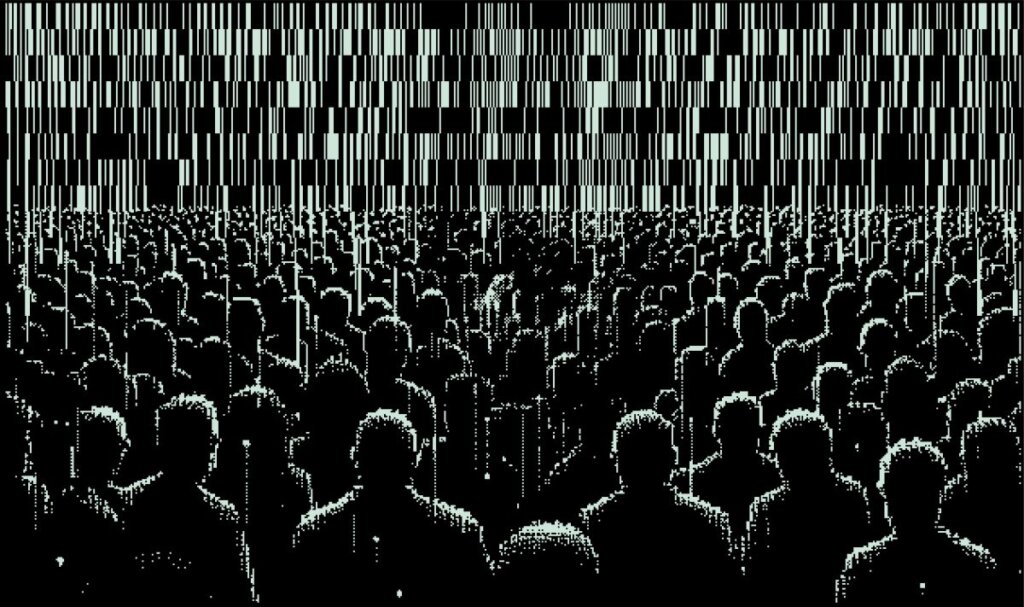

Political scientist Ian Bremmer describes the current landscape as “technopolar”: the role of major technology firms has shifted to resemble that of historical sovereign entities. These companies are influencing government policy and shaping public life, raising questions about accountability and the potential for unchecked power.

Despite the visible risks associated with AI, investment in its development continues to surge. Between 2013 and 2024, approximately $471 billion flowed into U.S. AI ventures, with projections of an additional $300 billion in 2025. This trend raises concerns that speed is being prioritized over safety, even as calls for accountability and oversight become increasingly urgent.

In light of these challenges, leaders in the AI field, including Dario Amodei, CEO of Anthropic, emphasize the need to redirect the course of AI development. The imperative is not to halt progress but to steer it so that human-centered innovation remains at the forefront of technological advancement. As the global community grapples with the implications of these technologies, the future of AI and its impact on humanity hangs in the balance.