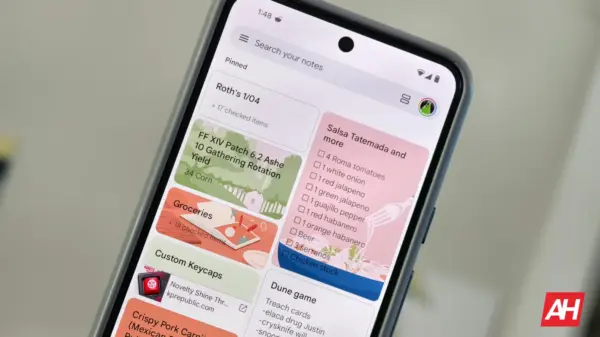

Pairing NotebookLM with a local Large Language Model (LLM) can noticeably improve digital research workflows. The combination lets users keep the organized research capabilities of NotebookLM while gaining the speed and privacy of a model that runs on their own machine.

Enhancing Research Efficiency

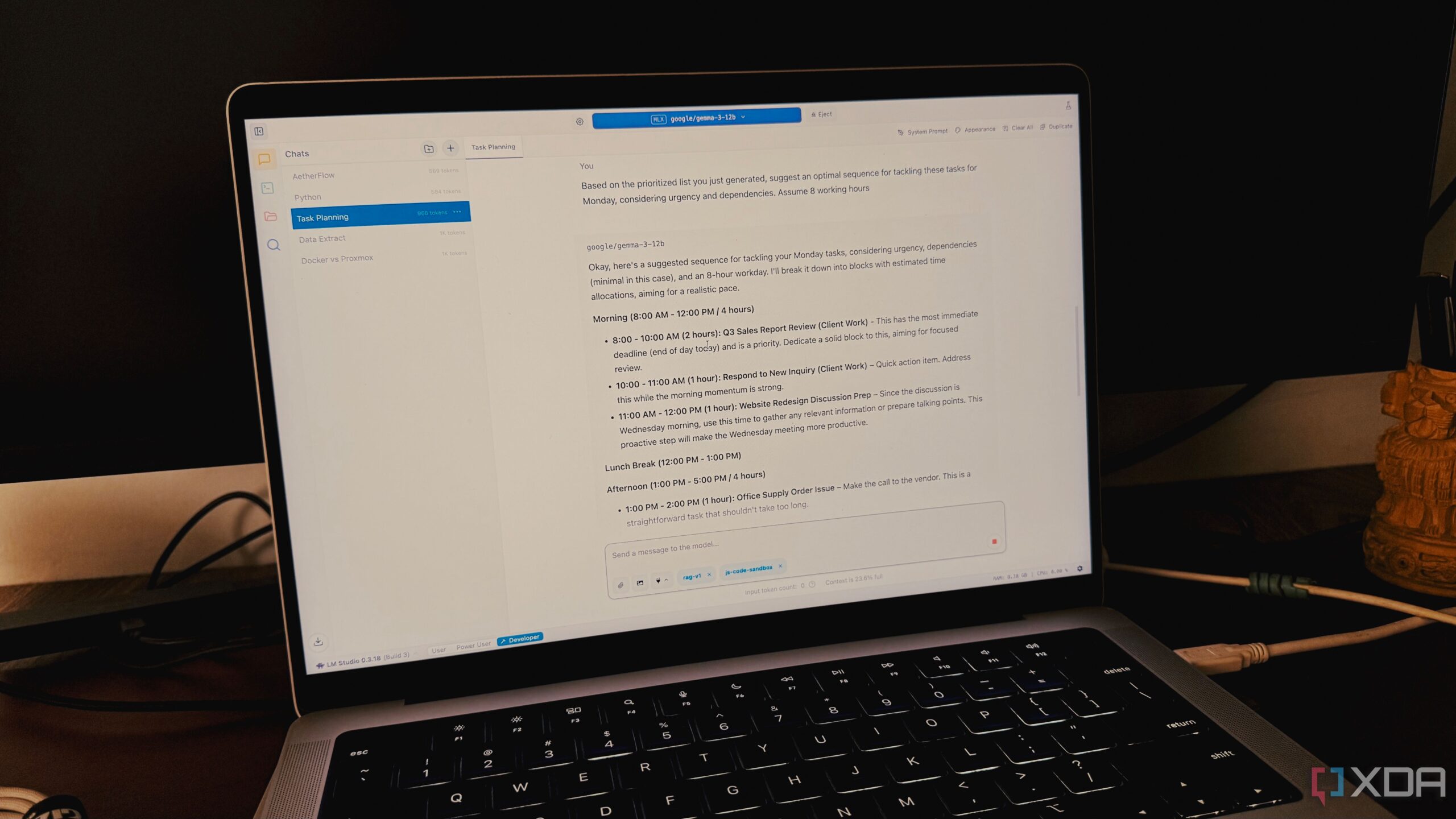

The challenge in handling extensive projects lies in balancing the need for deep context with the desire for complete control. NotebookLM excels at organizing research and generating insights from various sources, yet it is a cloud service that answers only from the sources a user supplies. In contrast, local LLMs, such as those run in LM Studio, run on the user's own hardware: they respond quickly, can be adjusted freely, and incur no API costs.

The two tools can be bridged in a simple two-step workflow. First, the local LLM is used for knowledge acquisition and structuring, producing a broad overview of a topic. For example, when tackling a complex subject like self-hosting applications via Docker, the local model quickly generates a structured primer that covers essential concepts such as security practices and networking fundamentals.
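The first step can be sketched in Python. This is a minimal illustration, not the author's exact setup: it assumes LM Studio's local server is running with its OpenAI-compatible chat endpoint at the default `http://localhost:1234`, and the model name `local-model`, the prompt wording, and the function names are all hypothetical placeholders.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port.
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_primer_request(topic, model="local-model"):
    """Build a chat-completions payload asking for a structured primer."""
    system = (
        "You are a research assistant. Write a structured primer in Markdown "
        "with headings for core concepts, security practices, and networking "
        "fundamentals."
    )
    return {
        "model": model,  # hypothetical name; use the model loaded locally
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": f"Write a primer on: {topic}"},
        ],
        "temperature": 0.3,  # illustrative value for a steadier outline
    }


def generate_primer(topic, url=LM_STUDIO_URL, model="local-model"):
    """Send the request to the local server and return the primer text."""
    payload = json.dumps(build_primer_request(topic, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=300) as response:
        body = json.load(response)
    # OpenAI-style responses carry the text in the first choice's message.
    return body["choices"][0]["message"]["content"]
```

With the server running, `generate_primer("self-hosting applications via Docker")` would return the primer as a Markdown string. Keeping the payload builder separate from the network call makes the prompt easy to adjust and inspect without a model loaded.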

Once this overview is created, users can paste or upload it into their NotebookLM project. NotebookLM then treats the structured overview as a source, so users can query it together with their other material. The result is a knowledge base tailored to individual research needs.
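One way to prepare the overview for NotebookLM is to save it as a Markdown file. The sketch below is illustrative only: it assumes NotebookLM will accept the file as an uploaded source (or its contents as pasted text), and the function name, file naming scheme, and provenance note are hypothetical choices rather than anything NotebookLM requires.

```python
from pathlib import Path


def save_primer_as_source(primer_text, topic, directory="."):
    """Write the primer to a Markdown file and return its path."""
    # Build a filesystem-safe file name from the topic.
    slug = "".join(c.lower() if c.isalnum() else "-" for c in topic).strip("-")
    path = Path(directory) / f"{slug}-primer.md"
    # Hypothetical header: records where the text came from.
    header = f"# Primer: {topic}\n\nGenerated by a local LLM.\n\n"
    path.write_text(header + primer_text.strip() + "\n", encoding="utf-8")
    return path
```

Here `primer_text` is whatever the local model returned. Labeling the file as model-generated keeps it distinguishable from primary sources once several documents sit in the same notebook.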

Transforming the Research Process

The efficiency gain from this hybrid approach is substantial. Once the local LLM's structured overview is in NotebookLM, users can ask targeted questions about their research and quickly receive answers grounded in that overview and their other sources. NotebookLM can also generate audio summaries of the compiled research, a convenient way to digest information while away from the desk.

Another valuable feature of NotebookLM is its source-checking and citation capability: answers link back to the passages they draw on, so users can trace the origin of each fact within their research. Verification that once took hours of manual work can now be done in minutes.

Combining a local LLM with NotebookLM changes how research can be approached. Rather than relying solely on cloud-based or local solutions, users can draw on the strengths of both: the local model for fast, private drafting and NotebookLM for organization and source-grounded answers. Together they provide a practical framework for managing complex projects.

For those looking to optimize their research workflows, integrating a local LLM with NotebookLM is worth exploring. The pairing gives users more control over their data and a more efficient research environment.